'Sadbait': Why algorithms, audiences and creators love to cry online

Serenity Strull/Getty Images

People say they don't want to be sad, but their online habits disagree. Content that channels sorrow for views has become a ubiquitous part of online culture. Why does it work?

Whether you're a state-sponsored misinformation campaign, a content creator trying to make it big or a company trying to sell a product, there's one proven way to win followers and earn money online: make people feel something.

Social media platforms have been called out for incentivising creators to make their audiences angry. But these critiques tend to focus on content designed to infuriate people into engaging with a post, often called "ragebait". It's earned considerable scrutiny and even shouldered some of the blame for political polarisation in recent years – but rage isn't the only emotion that leads users to linger in a comments section or repost a video.

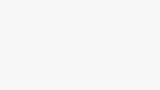

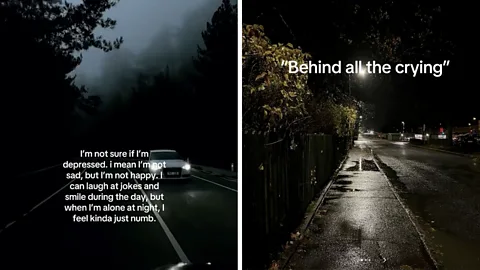

The internet is flooded with what some call 'sadbait'. It gets far less attention, but some of today's most successful online content is melancholy and melodramatic. Influencers film themselves crying. Scam artists lure their victims with stories of hard luck. In 2024, TikTokers racked up hundreds of millions of views with a forlorn genre of videos called "Corecore", where collages of depressing movie and news clips are laid over a bed of depressing music. Sadness is a feeling people might think they want to avoid, but gloomy, dark and even distressing posts seem to do surprisingly well with both humans and the algorithms that cater to them. The success of sadbait can tell us a lot about both the internet and ourselves.

Courtesy of @mpminds

"Displays of any kind of strong emotion – anger, sadness, disgust or even laughing – hook viewers," says Soma Basu, an investigative journalist and researcher at Tampere University in Finland who studies how media spreads online. Creators know their audience is scrolling through an endless stream of videos they could be watching instead, so a clear and urgent emotional appeal can make them stay, she says. But according to Basu, there's something about images of grief, in particular, that can blur the lines between the audience and the content, creating the opportunity for a special kind of connection.

Sadbait doesn't always have to be sad for viewers, either. Another viral genre of sadbait on Instagram and TikTok features slideshows of AI-generated cats meeting heartbreaking ends – paired with an AI cover of Billie Eilish's melancholic What Was I Made For that replaces the lyrics with "meows". These miserable kittens became so popular that Eilish performed the meowed version of the song at Madison Square Garden in October, with the audience joyfully singing along.

Nor does sadbait have to depict real, feeling humans. In the spring of 2024, AI-generated images of wounded veterans and impoverished children dominated Facebook feeds, becoming some of the most interacted-with posts on the platform, according to researchers. When an AI image depicting an imaginary victim of Hurricane Helene went viral in the US, right-wing influencers and even a Republican politician responded that "it doesn't matter" that the picture was fake because it resonated with people. Real or not, people love to be in their feelings.

Algorithms and audiences

Researchers analysing highly emotional content online, whether misinformation or memes, connect its success to the engagement-maximising goals of social media platforms. Their algorithms are tuned to boost posts that users spend the most time commenting on, looking at and sharing. The more a post draws a response, for whatever reason, the more likely others will see it.

It's logical enough – internet users, like movie audiences and book readers before them, respond to sad and sentimental content, and the algorithms reward it. On the big social media platforms, content creators are paid based on measurements of how long and deeply users engage with their posts. The best way to reach viewers is to appease the algorithm. Creators try to figure out what the machine will promote and make more of it – and the feedback loop continues.

Courtesy of @TechTakneek

There can be a cynicism to the techniques creators use to drum up views. But sadbait videos don't just trigger emotions; they can also offer an outlet to feel and explore them, according to Nina Lutz, a misinformation researcher working at the University of Washington.

"I don't think it's a response to the content itself," Lutz says, "but rather, the content serves as a space that may allow for people with enough common interests and experiences to come together".

Accounts on TikTok posting slideshows of blurry black-and-white photos of streetlamps with captions about depression get millions of views – with profiles that often say things like "dms are open if you need to talk". In the comments on obviously AI-generated pictures of crying children and wounded veterans, strangers share their very human-generated problems in frank detail. "I read comments and discussions about sexual assault, miscarriages, polio, abortion, loss of siblings and children, deep loneliness and crises of faith," Lutz says. "Heavy and sad stuff. I had to step away from my computer several times."

Using posts as a place to talk about problems is an old practice online. "When I was a teen this would happen a lot in fandom spaces or Tumblr, which were also public forums," Lutz says. "People are seeking connection and finding it in maybe unorthodox spots."

And, as users have conversations about their own lives in the comments of sadbait videos, that activity draws the eyeballs of observers and keeps the algorithm juiced.

Crying lessons

This kind of outlet is especially useful in a world where sadness can be taboo, says Basu.

Basu has studied a peculiar genre of depressing videos on Indian social media. In these so-called "crying videos", Indian influencers lip-sync and weep along to reposted audio from movies or songs on TikTok. The genre became an entire category of viral content before the app was banned in India in 2020, so successful that you can even find instruction videos teaching creators how to make themselves cry for content. After the ban, many influencers who established their careers with viral crying videos migrated to Instagram Reels.

"[Crying videos] go viral because they don't fit into accepted social norms," Basu says. Seeing people express emotions that are usually only felt in private offers viewers "a rare and cherished access to something that is private, niche, hidden", she says.

This sort of digital intimacy might seem like voyeurism on the part of viewers and exhibitionism on the part of creators. But sad content also serves a deeper function, exposing and commenting on aspects of offline society, Basu says. "In their passionate display of emotions, [these videos] complicate and expose the crevice between different kinds of divisions – class, caste or ethnicity, gender, sexuality, literacy and so on."

Private account

The transgression of showing emotion when you aren't supposed to, or sympathising with someone else from across a social divide, is part of what draws viewers into crying videos, Basu says. In TikTok videos, as in other kinds of art or performance, users and posters can play outside the usual lines of what social codes and expectations allow.

Another factor that makes sad content compelling to viewers is the continued rewriting of what it can mean. "New interpretations emerge as it circulates through different social groups. These videos foster diverse social connections," Basu says.

A crying video may begin as a teenage boy in his parents' house exploring what it feels like to weep in public, but it can turn into a video edit that an ironic poster mocks, an occasion for two older women in different parts of the world to connect over stories about their children and a thriving career as a luxury car-driving influencer for that same crying teenage boy – as is the case with Sagar Goswami, one of the Indian influencers that Basu studied.

But too often, Lutz says, experts and observers examine digital ecosystems as if people are unconscious participants in a giant, attention-crunching machine. There are important economic, psychological and technical forces steering the rivers of the internet, she says, but that doesn't mean users are oblivious.

"People get this. Everyday users understand the engagement economy," Lutz says. "We have to move away from this understanding of a digital public that doesn't know anything about these dynamics – they are sitting in them!"

Online humans aren't like fish in a barrel – instead, they are savvy consumers who use the barrel, the water in it and the hooks dangled down as tools to accomplish their own goals, she says. This might be why a lot of the engagement with the sadbait content Lutz and other researchers study is "both ironic and sincere," she says.

In fact, one popular method of consuming this style of content serves as a comment on the content itself, according to Basu. Users share sadbait and other maudlin posts to mock and laugh at them, or even gather them into compilations of so-called "cringe" content.

But for the algorithms curating the content, a like is a like, whether ironic or not. Many users understand that the algorithms and content creators are out to manipulate them, Lutz says, and can recognise when a post is insincere.

But if users engage with a post, it's still successful, regardless of why or how they're doing it. Like anything else online, sadbait content might work for a simple reason: people want to see it.
