Evolution explains why we act differently online

New research is revealing that trolls live inside all of us – but that there are ways to defeat them and build more cooperative digital societies.

One evening in February 2018, Professor Mary Beard posted on Twitter a photograph of herself crying. The eminent University of Cambridge classicist, who has almost 200,000 Twitter followers, was distraught: after making a comment about Haiti, she had received a storm of abuse online. She also tweeted: “I speak from the heart (and of cource [sic] I may be wrong). But the crap I get in response just isnt [sic] on; really it isn’t.”

In the days that followed, Beard received support from several high-profile people – even if not all of them agreed with her initial tweet. They were themselves then targeted. And when one of Beard’s critics, fellow Cambridge academic Priyamvada Gopal, a woman of Asian heritage, set out her response to Beard’s original tweet in an online article, she also received a torrent of abuse.

Women and members of ethnic minority groups are disproportionately the target of Twitter abuse, including death threats and threats of sexual violence. Where these identity markers intersect, the bullying can become particularly intense – as experienced by black female MP Diane Abbott, who alone received nearly half of all the abusive tweets sent to female MPs during the run-up to the 2017 UK general election. Black and Asian female MPs received on average 35% more abusive tweets than their white female colleagues even when Abbott was excluded from the total.

The constant abuse is silencing people, pushing them off online platforms and further reducing the diversity of online voices and opinion. And it shows no sign of abating. A survey last year found that 40% of American adults had experienced online abuse, with almost half of them receiving severe forms of harassment, including physical threats and stalking. Seventy per cent of women described online harassment as a “major problem”.

The internet offers unparalleled promise of cooperation and communication between all of humanity. But instead of embracing a massive extension of our social circles online, we seem to be reverting to tribalism and conflict.

While we generally conduct our real-life interactions with strangers politely and respectfully, online we can be horrible. Is there any way we can relearn the cooperation that enabled us to find common ground and thrive as a species?

~

“Don’t overthink it, just press the button!”

I click and quickly move on to the next question. We’re all playing against the clock. My teammates are far away and unknown to me, so I have no idea if we’re all in it together or whether I’m being played for a fool. But I know the others are depending on me.

This is a so-called public goods game at Yale University’s Human Cooperation Lab, which researchers use as a tool to help understand how and why we cooperate.

Over the years, scientists have proposed various theories about why humans cooperate enough to form strong societies. The evolutionary roots of our general niceness, most now believe, can be found in the survival advantage humans experience when we cooperate as a group.

In this game, I’m in a team of four people in different locations. Each of us is given the same amount of money. We are asked to choose how much we will contribute to a group pot on the understanding that this pot will be doubled and split equally among us. Like all cooperation, this relies on a certain level of trust that the others in your group will be nice. If everybody in the group contributes all of their money, all the money gets doubled and redistributed four ways, so everyone doubles their money. Win–win!

“But if you think about it from the perspective of an individual,” says lab director David Rand, “for each dollar that you contribute, it gets doubled to two dollars and then split four ways – which means each person only gets 50 cents back for the dollar they contributed.”

In other words, even though everyone is better off collectively by contributing to a group project that no one could manage alone (in real life, this could be paying towards a hospital building, for example), there is a cost at the individual level. Financially, you make more money by being more selfish.
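
Rand’s arithmetic can be checked with a few lines of Python. This is a minimal sketch, not the lab’s game software: the function name `payoffs`, the $10 starting stake and the rule that each player keeps whatever they don’t contribute are illustrative assumptions; only the four-player group and the doubling of the pot come from the description above.

```python
def payoffs(contributions, endowment=10.0, multiplier=2.0):
    """Final money for each player after one round of a public goods game.

    Assumed rules (illustrative, not the lab's software): every player starts
    with the same hypothetical `endowment`, keeps whatever they do not put in,
    and receives an equal share of the pot after it is multiplied.
    """
    share = sum(contributions) * multiplier / len(contributions)
    return [endowment - c + share for c in contributions]

# Everyone contributes everything: the pot doubles and everyone doubles their money.
print(payoffs([10, 10, 10, 10]))  # [20.0, 20.0, 20.0, 20.0]

# One player free-rides: they end up richer than the three who contributed.
print(payoffs([0, 10, 10, 10]))   # [25.0, 15.0, 15.0, 15.0]

# Each dollar a player contributes returns only multiplier / group size to them.
print(2.0 / 4)                    # 0.5
```

The second case is the dilemma in miniature: the group as a whole ends up with $70 rather than $80, yet the free-rider personally comes out $5 ahead by holding back.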

Rand’s team has run this game with thousands of players. Half of them are asked, as I was, to decide their contribution within 10 seconds. The other half are asked to take their time and carefully consider their decision. It turns out that when people go with their gut reaction, they are much more generous.

“There is a lot of evidence that cooperation is a central feature of human evolution,” says Rand. “In the small-scale societies that our ancestors were living in, all our interactions were with people that you were going to see again and interact with in the immediate future.” That kept in check any temptation to act aggressively or take advantage and free-ride off other people’s contributions.

So rather than work out every time whether it’s in our long-term interests to be nice, it’s more efficient and less effort to have the basic rule: be nice to other people. That’s why our unthinking response in the experiment is a generous one.

But learned behaviour can also change.

Usually, those in Rand’s experiment who play the quickfire round are generous and receive generous dividends, reinforcing their generous outlook. But those who consider their decisions for longer are more selfish. This results in a meagre group pot, reinforcing the idea that it doesn’t pay to rely on the group.

In a further experiment, Rand gave money to people who had played one round of the game. They were asked how much they wanted to give to an anonymous stranger. This time, there was no incentive; they would be acting entirely charitably.

The people who had got used to cooperating in the first stage gave twice as much money in the second stage as the people who had got used to being selfish.

“We’re affecting people’s internal lives and behaviour,” Rand says. “The way they behave even when no-one’s watching and when there’s no institution in place to punish or reward them.”

Rand’s team has also tested how people in different countries play the game to see how the strength of social institutions – such as government, family, education and legal systems – influences behaviour. In Kenya, where public sector corruption is high, players initially gave less generously to the stranger than players in the US, which has less corruption. This suggests that people who can rely on relatively fair social institutions behave in a more public-spirited way than those whose institutions are less reliable. However, after playing just one round of the cooperation-promoting version of the public goods game, the Kenyans’ generosity equalled that of their US counterparts. And it cut both ways: Americans trained to be selfish gave a lot less.

So there may be something about online social media culture that can encourage mean behaviour. Unlike hunter-gatherer societies, which rely on cooperation to survive and have rules for sharing food, for example, social media has weak institutions. It offers physical distance, relative anonymity and little reputational or punitive risk for bad behaviour. If you’re mean, no-one you know is going to see.

On the flip side, you can choose to broadcast an opinion that benefits your standing with your social group. At Yale’s Crockett Lab, for instance, researchers study how social emotions are transformed online – in particular moral outrage. Brain-imaging studies show that when people act on their moral outrage (in the offline world, confronting someone who allows their dog to foul a playground, for instance), their brain’s reward centre is activated: they feel good about it. This reinforces their behaviour so they are more likely to intervene in a similar way again. And while challenging a violator of your community’s social norms has risks – you may get attacked – it also boosts your reputation.

Those of us fortunate enough to have relatively peaceful lives are rarely faced with truly outrageous behaviour. As a result, we rarely see moral outrage expressed. But open up Twitter or Facebook and you get a very different picture. Recent research shows that messages with both moral and emotional words are more likely to spread on social media – each moral or emotional word in a tweet increases the likelihood of it being retweeted by 20%.

“Content that triggers outrage and that expresses outrage is much more likely to be shared,” says lab director Molly Crockett. What we’ve created online is “an ecosystem that selects for the most outrageous content, paired with a platform where it’s easier than ever before to express outrage”.

Unlike in the offline world, there is little or no personal risk in confronting and exposing someone. And it feeds itself. “If you punish somebody for violating a norm, that makes you seem more trustworthy to others, so you can broadcast your moral character by expressing outrage and punishing social norm violations,” Crockett says.

“When you go from offline – where you might boost your reputation for whoever happens to be standing around at the moment – to online, where you broadcast it to your entire social network, then that dramatically amplifies the personal rewards of expressing outrage.”

This is compounded by positive feedback such as ‘likes’. As a result, the platforms help people turn expressing outrage into a habit. “And a habit is something that’s done without regard to its consequences,” Crockett points out.

On the upside, online moral outrage has allowed marginalised, less-empowered groups to promote causes that traditionally have been harder to advance. It played an important role in focusing attention on the sexual abuse of women by high-status men. And in February 2018, Florida teens railing on social media against another school shooting helped to shift public opinion.

“I think that there must be ways to maintain the benefits of the online world,” says Crockett, “while thinking more carefully about redesigning these interactions to do away with some of the more costly bits.”

~

The good news is that it may only take a few people to alter the culture of a whole network.

At Yale’s Human Nature Lab, Nicholas Christakis and his team explore ways to identify these individuals and enlist them in public health programmes that could benefit the community. In Honduras, they are using this approach to influence vaccination enrolment and maternal care, for example. Online, such people have the potential to turn a bullying culture into a supportive one.

Corporations already use a crude system of identifying so-called Instagram influencers to advertise their brands. But Christakis is looking not just at how popular an individual is, but at how they fit into a given network. In a small isolated village, for example, everyone is closely connected and you’re likely to know everyone at a party. In a city, by contrast, people may live more densely packed overall, but you are less likely to know everyone at a party there. How thoroughly interconnected a network is affects how behaviours and information spread around it.

To explore this, Christakis has designed software that creates temporary artificial societies online. “We drop people in and then we let them interact with each other and see how they play a public goods game, for example, to assess how kind they are to other people.”

Then he manipulates the network. “By engineering their interactions one way, I can make them really sweet to each other, work well together, and they are healthy and happy and they cooperate. Or you take the same people and connect them a different way and they’re mean jerks to each other.”

In one experiment, he randomly assigned strangers to play the public goods game with each other. In the beginning, he says, about two-thirds of people were cooperative. “But some of the people they interact with will take advantage of them and, because their only option is either to be kind and cooperative or to be a defector, they choose to defect because they’re stuck with these people taking advantage of them. And by the end of the experiment everyone is a jerk to everyone else.”

Christakis turned this around simply by giving each person a little bit of control over who they were connected to after each round. They had to decide whether to be kind to their neighbours and whether to stick with them, or not. The only thing each one knew was whether another player had cooperated or defected in the round before. “What we were able to show is that people cut ties to defectors and form ties to cooperators, and the network rewired itself.” In other words, the players ended up in a cooperative, prosocial structure rather than an uncooperative one.
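
The rewiring mechanism Christakis describes can be sketched as a toy simulation. This is an illustration under stated assumptions, not his team’s software: the function names, the payoffs (a cooperator pays 1 unit per neighbour and each neighbour gains 2), the 10-player network and the review rate are all hypothetical. Each player’s choice to cooperate or defect is also held fixed so that only the ties change, whereas in the experiment players chose anew each round.

```python
import itertools
import random

def payoff(i, strategies, edges, benefit=2.0, cost=1.0):
    """Hypothetical payoff for player i: `benefit` from each cooperating
    neighbour, minus `cost` per neighbour if i itself cooperates."""
    neighbours = [j for j in range(len(strategies)) if frozenset((i, j)) in edges]
    received = benefit * sum(1 for j in neighbours if strategies[j] == "C")
    paid = cost * len(neighbours) if strategies[i] == "C" else 0.0
    return received - paid

def rewire(strategies, edges, rate, rng):
    """One rewiring round: each pair of players is reviewed with probability
    `rate`. An existing tie is cut if either player defected; a new tie forms
    only if both cooperated (each accepts only partners who cooperated)."""
    new_edges = set(edges)
    for i, j in itertools.combinations(range(len(strategies)), 2):
        if rng.random() >= rate:
            continue  # this pair is not reviewed this round
        pair = frozenset((i, j))
        both_cooperated = strategies[i] == "C" and strategies[j] == "C"
        if pair in new_edges and not both_cooperated:
            new_edges.discard(pair)  # at least one of them defected: cut the tie
        elif pair not in new_edges and both_cooperated:
            new_edges.add(pair)      # two cooperators link up
    return new_edges

def mean_payoff(group, strategies, edges):
    ids = [i for i, s in enumerate(strategies) if s == group]
    return sum(payoff(i, strategies, edges) for i in ids) / len(ids)

rng = random.Random(42)
strategies = ["C"] * 6 + ["D"] * 4  # hypothetical: 6 cooperators, 4 defectors
# Start from a random network where each possible tie exists with probability 0.3.
edges = {frozenset(p) for p in itertools.combinations(range(10), 2) if rng.random() < 0.3}

print("before:", mean_payoff("C", strategies, edges), mean_payoff("D", strategies, edges))
for _ in range(5):
    edges = rewire(strategies, edges, rate=0.5, rng=rng)
print("after:", mean_payoff("C", strategies, edges), mean_payoff("D", strategies, edges))
```

Because cooperators refuse ties to anyone who defected, defectors gradually lose the neighbours they were exploiting, while cooperators cluster together and collect the benefit of each other’s contributions.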

In an attempt to generate more cooperative online communities, Christakis’s team has started adding bots to their temporary societies. His team is not interested in inventing super-smart AI to replace human cognition, but in infiltrating a population of smart humans with ‘dumb-bots’ to help the humans help themselves.

In fact, Christakis found that if the bots played perfectly, that didn’t help the humans. But if the bots made some mistakes, they unlocked the potential of the group to find a solution. In other words, by adding a little noise to the system, the bots helped the network to function more efficiently.

A version of this model could involve infiltrating the newsfeeds of partisan people with occasional items offering a different perspective, helping to shift people out of their social media comfort bubbles and allowing society as a whole to cooperate more.

Bots may offer a solution to another online problem: much antisocial behaviour online stems from the anonymity of internet interactions.

One experiment found that the level of racist abuse tweeted at black users could be dramatically slashed by using bot accounts with white profile images to respond to racist tweeters. A typical bot response to a racist tweet would be: “Hey man, just remember that there are real people who are hurt when you harass them with that kind of language.” Simply cultivating a little empathy in such tweeters reduced their racist tweets almost to zero for weeks afterwards.

Another way of addressing the low reputational cost of bad behaviour online is to engineer a form of social punishment. Riot Games, the company behind the online game League of Legends, introduced a “Tribunal” feature, in which negative play is punished by other players. The company reported that 280,000 players were “reformed” after such punishment in one year, achieving a positive standing in the community. Developers could also build in social rewards for good behaviour, encouraging more cooperative elements that help build relationships.

Getty Images

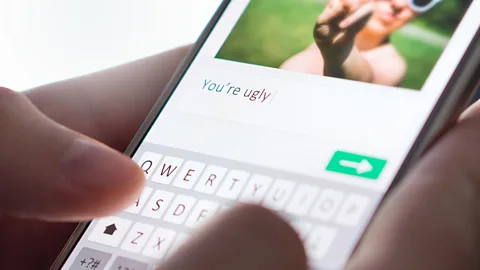

Getty ImagesResearchers already are learning how to predict when an exchange is about to turn bad – the moment at which it could benefit from pre-emptive intervention. “You might think that there is a minority of sociopaths online, which we call trolls, who are doing all this harm,” says Cristian Danescu-Niculescu-Mizil at Cornell University’s Department of Information Science. “What we actually find in our work is that ordinary people, just like you and me, can engage in such antisocial behaviour. For a specific period of time, you can actually become a troll. And that’s surprising.”

Danescu-Niculescu-Mizil has been investigating the comments sections below online articles. He identifies two main triggers for trolling: the context of the exchange (how other users are behaving) and your mood. “If you’re having a bad day, or if it happens to be Monday, for example, you’re much more likely to troll in the same situation,” he says. “You’re nicer on a Saturday morning.”

After collecting data, including from people who had trolled others in the past, Danescu-Niculescu-Mizil built an algorithm that predicts with 80% accuracy when someone is about to become abusive online. This provides an opportunity to, for example, introduce a delay in how fast they can post their response. If people have to think twice before they write something, that improves the context of the exchange for everyone: you’re less likely to witness people misbehaving, and so less likely to misbehave yourself.

But in spite of the horrible behaviour many of us have experienced online, the majority of interactions are cooperative, and justified moral outrage is usefully employed in challenging hateful tweets. A recent British study looking at anti-Semitism on Twitter found that posts challenging anti-Semitic tweets are shared far more widely than the anti-Semitic tweets themselves. Most hateful posts were ignored or only shared within a small echo chamber of similar accounts. Perhaps we’re already starting to do the work of the bots ourselves.

It’s worth remembering that we’ve had thousands of years to hone our person-to-person interactions, but only 20 years of social media. “Offline, we have all these cues from facial expressions to body language to pitch,” Danescu-Niculescu-Mizil says. “Online we discuss things only through text. I think we shouldn’t be surprised that we’re having so much difficulty in finding the right way to discuss and cooperate online.”

As our online behaviour develops, we may well introduce subtle signals, digital equivalents of facial cues, to help smooth online discussions. In the meantime, the advice for dealing with online abuse is to stay calm. Don’t retaliate. Block and ignore bullies, or if you feel up to it, tell them to stop. Talk to family or friends about what’s happening and ask them to help you. Take screenshots and report online harassment to the social media service where it’s happening, and if it includes physical threats, report it to the police.

If social media as we know it is going to survive, the companies running these platforms are going to have to keep steering their algorithms, perhaps informed by behavioural science, to encourage cooperation and kindness rather than division and abuse. As users, we too may well learn to adapt to this new communication environment so that civil and productive interaction remains the norm online as it is offline.

“I'm optimistic,” Danescu-Niculescu-Mizil says. “This is just a different game and we have to evolve.”

This article was first published on Mosaic by Wellcome and is republished here under a Creative Commons licence. Full disclosure: Wellcome, the publisher of Mosaic, has shares in Facebook, Alphabet and other social media companies as part of its investment portfolio.