The moments that could have accidentally ended humanity


In recent history, a few individuals have made decisions that could, in theory, have unleashed killer aliens or set Earth's atmosphere on fire. What can they tell us about attitudes to the existential risks we face today?

In the late 1960s, Nasa faced a decision that could have shaped the fate of our species. After the Apollo 11 Moon landing, the three astronauts were waiting to be picked up inside their capsule, floating in the Pacific Ocean – and they were hot and uncomfortable. Nasa officials decided to make things more pleasant for their national heroes. The downside? There was a small possibility of unleashing deadly alien microbes on Earth.

A couple of decades beforehand, a group of scientists and military officials stood at a similar turning point. As they waited to watch the first atomic weapon test, they were aware of a potentially catastrophic outcome. There was a chance that their experiments might accidentally ignite the atmosphere and destroy all life on the planet.

At a handful of moments in the past century, a few rare groups of people have held the world's fate in their hands, responsible for the tiny-but-real possibility of causing total catastrophe. Not just the end of their own lives, but the end of everything.

So, what happened that led to these decisions? And what can they tell us about attitudes to the kinds of risks and crises we face today?


When humanity first made plans to send probes and people into space in the mid-20th Century, the issue of contamination came up.

Firstly, there was the fear of "forward" contamination – the possibility that Earth-based life might accidentally hitch a ride into the cosmos. Spacecraft needed to be sterilised and carefully packaged before launch. If microbes snuck on board, they would confuse any attempts to detect alien life. And if there were extra-terrestrial organisms out there, we might end up inadvertently killing them with Earth-based bacteria or viruses, like the fate of the aliens at the end of The War of the Worlds. These concerns matter just as much today as they did in the Space Race era.


A second concern was "back" contamination. This was the idea that astronauts, rockets or probes returning to Earth might bring back life that could prove catastrophic, either by outcompeting Earth organisms or by doing something far worse, like consuming all our oxygen.

Back contamination was a fear that Nasa needed to take seriously during the planning of the Apollo missions to the Moon. What if the astronauts brought back something dangerous? At the time, the probability was not considered high – few thought that the Moon was likely to harbour life – but still, the scenario had to be explored, because the consequences were so severe. "Maybe it's sure to 99% that Apollo 11 will not bring back lunar organisms," said one influential scientist at the time, "but even that 1% of uncertainty is too large to be complacent about."

Nasa put several quarantine measures in place – in some cases, a little reluctantly. Concerned officials from the US Public Health Service argued for stricter measures than initially planned, twisting the space agency's arm by pointing out that they had the power to refuse border entry to contaminated astronauts. After congressional hearings, Nasa agreed to install a costly quarantine facility on the ship that would pick up the men from their splashdown in the Pacific Ocean. It was also agreed that the lunar explorers would then spend three weeks in isolation before they could hug their families or shake the hand of the president.

However, there was a major gap in the quarantine procedure, according to the law scholar Jonathan Wiener of Duke University, who writes about the episode in a paper about misperceptions of catastrophic risk.

When the astronauts splashed down, the original protocol stated that they should stay inside the spacecraft. But Nasa had second thoughts after concerns were raised about the astronauts' wellbeing while they waited inside the hot, stuffy capsule, buffeted by waves. Officials decided instead to open the hatch and retrieve the men by raft and helicopter (see picture at the top of this article). Although the astronauts wore biocontamination suits and then entered the quarantine facility on the ship, the air inside the capsule flooded out as soon as it was opened at sea.

Fortunately, the Apollo 11 mission brought no deadly alien life back to Earth. But if it had, that decision to prioritise the short-term comfort of the men could have released it into the ocean during that brief window.

Nuclear annihilation

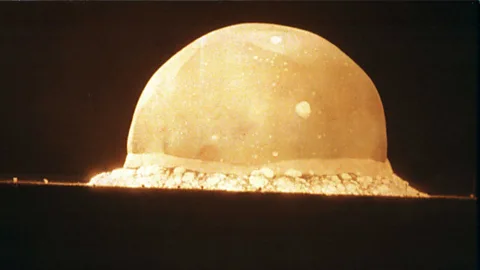

Twenty-four years earlier, scientists and officials within the US government stood at another turning point that involved a small-but-potentially-disastrous risk. Before the first atomic weapons test in 1945, scientists at the Manhattan Project performed calculations that pointed to a chilling possibility. In one scenario they plotted out, the heat from the fission explosion would be so great that it could trigger runaway fusion. In other words, the test might accidentally set the atmosphere on fire and burn away the oceans, destroying most of the life on Earth.

Subsequent studies suggested that it was most likely impossible, but right up until the day of the test, the scientists checked and re-checked their analysis. The day of the Trinity test finally came, and officials decided to go ahead.


When the flash was longer and brighter than expected, at least one member of the team watching it thought that the worst had happened: the president of Harvard University, whose initial awe rapidly turned to fear. "Not only did [he] have no confidence the bomb would work, but when it did he believed they had botched it with disastrous consequences, and that he was witnessing, as he put it, 'the end of the world'," his granddaughter Jennet Conant told the Washington Post after writing a book profiling the scientists of the project.

For the philosopher Toby Ord at Oxford University, that moment was a significant point in human history. He marks the exact time and date of the Trinity test – 05:29 on 16 July 1945 – as the beginning of a new era for humanity, defined by a step-change in our ability to destroy ourselves. "Suddenly we were unleashing so much energy that we were creating temperatures unprecedented in Earth's entire history," Ord writes in his book The Precipice. Despite the rigour of the Manhattan scientists, the calculations were never subjected to peer review by a disinterested party, he points out, and there was also no evidence that any elected representative had been told about the risk, let alone any other government. The scientists and military leaders went ahead on their own.

Ord also highlights that, in 1954, the scientists got a calculation staggeringly wrong in another nuclear test: instead of an expected 6 megatonne explosion, they got 15. "Of the two major thermonuclear calculations made that summer… they got one right and one wrong. It would be a mistake to conclude from this that the subjective risk of igniting the atmosphere was as high as 50%. But it was certainly not a level of reliability on which to risk our future."

A vulnerable world

From our enlightened position in the 21st Century, it would be easy to judge these decisions as specific to their time. Scientific knowledge about contamination and life in the Solar System is so much more advanced, and the war between the Allies and the Nazis is long past. Nowadays, no-one would take risks like that again, right?

Sadly not. Whether by accident or otherwise, the possibility of catastrophe is, if anything, greater now than it was back then.


Admittedly, alien annihilation is not the biggest risk the world faces. Still, while there may be "planetary protection" policies and labs to guard against alien back contamination, it's an open question how well these regulations and procedures will apply to private ventures that visit other planets and moons in the Solar System. (Adding to the alien catastrophe threat, broadcasting our presence into the galaxy may risk a disastrous meeting with aliens, especially if they are more technologically advanced than us. History suggests that bad things tend to happen to populations that encounter more technologically proficient cultures – look at the fate of indigenous people meeting European settlers.)

More concerning is the threat of nuclear weapons. A burning atmosphere may be impossible, but a nuclear winter akin to the climatic change that helped to kill off the dinosaurs is not. In WWII, atomic arsenals were not abundant or powerful enough to trigger this disaster, but now they are.

Ord estimates that the risk of human extinction in the 20th Century was around one in 100. But he believes it's higher now. On top of the natural existential risks that were always there, the potential for a human-made demise has ramped up significantly over the past few decades, he argues. As well as the nuclear threat, the prospect of misaligned artificial intelligence has emerged, carbon emissions have skyrocketed, and we can now meddle with the biology of viruses to make them far more deadly.

We're also rendered more vulnerable by global connectivity, misinformation and political intransigence, as the Covid-19 pandemic has shown. "Given everything I know, I put the risk this century at around one in six – Russian roulette," he writes. "If we do not get our act together, if we continue to let our growth in power outstrip that of wisdom, we should expect this risk to be even higher next century, and each successive century."

Another way that existential risk researchers have characterised this burgeoning danger is by asking you to imagine picking balls out of a giant urn. Each ball represents a new technology, discovery or invention. The vast majority of them are white or grey. A white ball represents a good advance for humanity, like the discovery of soap. A grey ball represents a mixed blessing, like social media. Inside the urn, however, there are a handful of black balls. They are exceedingly rare, but pick one out, and you have destroyed humanity.

This is called the "vulnerable world hypothesis", and it highlights the problem of preparing for very rare, very dangerous events in our future. So far, we haven't picked out a black ball, but that's most likely because they are so uncommon – and our hand has already brushed against one or two as we reached into the urn. In short, we've been lucky.

There are many technologies or discoveries that could turn out to be black balls. Some we know about already but have not yet unleashed at catastrophic scale, such as nuclear weapons or bioengineered viruses. Others are known unknowns, such as machine learning or genomic technology. Others are unknown unknowns: we don't even know they are dangerous, because they haven't been conceived of yet.

The tragedy of the uncommons

Why do we fail to treat these catastrophic risks with the gravity they deserve? Wiener has some suggestions. He describes the way that people misperceive extreme catastrophic risks as "tragedies of the uncommons".

You have probably heard of the tragedy of the commons: it describes the way that self-interested individuals mismanage a communal resource. Each person does what's best for themself, but everybody ends up suffering. It underlies problems such as climate change, deforestation and overfishing.


A tragedy of the uncommons is different, explains Wiener. Rather than people mismanaging a shared resource, here people are misperceiving a rare catastrophic risk.

He proposes three reasons why this happens:

The first is the "unavailability" of rare catastrophes. Recent, salient events are easier to bring to mind than events that have never happened. The brain tends to construct the future from a collage of memories about the past. If a risk leads the news – terrorism, for instance – public concern grows, politicians act, new technology gets developed, and so on. The special difficulty of foreseeing tragedies of the uncommons, however, is that it is impossible to learn from experience. They never appear in headlines. But once they happen, that's it: game over.

The second reason we misperceive very rare catastrophes is the "numbing" effect of a massive disaster. Psychologists observe that people's concern does not grow linearly with the severity of a catastrophe. To put it more bluntly, if you ask people how much they care about everyone on Earth dying, they do not report seven-and-a-half billion times more concern than if you told them one person would die. Nor do they account for the lives of future generations that would be lost. At large numbers, there's some evidence that people's concern even drops relative to their concern about individual tragedy. In a recent article for BBC Future about the psychology of numbing, the journalist Tiffanie Wen quotes Mother Teresa, who said: "If I look at the mass I will never act. If I look at the one, I will."

Finally, Wiener describes an "underdeterrence" effect that encourages a laissez-faire attitude among those taking the risks, because there is no accountability. If the world ends because of your decisions, then you can't get sued for negligence. Laws and rules have no power to deter species-ending recklessness.

Perhaps the most troubling thing is that a tragedy of the uncommons could happen by accident – whether it's via hubris, stupidity, or neglect.

"All else being equal, not many people would prefer to destroy the world. Even faceless corporations, meddling governments, reckless scientists, and other agents of doom require a world in which to achieve their goals of profit, order, tenure, or other villainies," the AI researcher Eliezer Yudkowsky once wrote. "If our extinction proceeds slowly enough to allow a moment of horrified realisation, the doers of the deed will likely be quite taken aback… if the Earth is destroyed, it will probably be by mistake."

We can be thankful that the Apollo 11 officials and Manhattan scientists were not those horrified individuals. But someday in the future, someone will arrive at another turning point where the fate of the species is theirs to decide. Or perhaps they are already on that road, hurtling towards disaster with their eyes closed. Hopefully, for the sake of humanity, they will make the right choice when their moment comes.

--

Richard Fisher is a senior journalist for BBC Future. Twitter: @rifish
