Davos 2024: Can – and should – leaders aim to regulate AI directly?

At this year's World Economic Forum in Davos, global leaders are asking whether to put guardrails in place for AI itself, or to regulate its effects once the technology is developed.

Artificial intelligence (AI) is top of mind for many workers, who are hopeful about its possibilities but wary of its future implications.

Earlier this month, researchers for the International Monetary Fund found that AI may affect the work of four in 10 employees worldwide. That number jumps to six in 10 in advanced economies, in industries as diverse as telemarketing and law. Additionally, a just-released report by the World Economic Forum showed half of the economists surveyed believed AI would become "commercially disruptive" in 2024 – up from 42% in 2023.

Business leaders at the World Economic Forum's 2024 Annual Meeting in Davos are also prioritising conversations about artificial intelligence. In particular, they are focused on how to regulate AI so that it is a force for good, both in business and in the world at large.

"This is the most powerful technology of our times," Arati Prabhakar, director of the US White House Office of Science and Technology Policy, said at a Davos panel on 17 January. "We see the power of AI as something that must be managed. The risks have to be managed."

The conversation around how, exactly, governance can help manage that risk comes at a crucial inflection point. Alongside AI's emerging benefits has been a more complicated and darker reality: artificial intelligence can have unintended consequences and even nefarious uses. Take, for example, the software that automatically (and unlawfully) turned down job applicants over a certain age, or the AI-powered chatbot that spouted sexist and racist tweets.

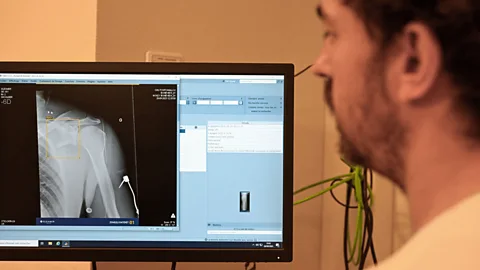

One approach on the table at Davos is regulating the way artificial intelligence works from the start.

This could mean putting in place policies that evaluate and audit algorithms. The core idea is to ensure the algorithms themselves aren't misusing data in ways that could lead to unlawful outcomes. In the US, for example, federal agencies have proposed that quality-control assessments be established for algorithms that evaluate a property's collateral value in mortgage applications.

Some experts in the industry, however, are concerned that restrictive regulation of the technology at its origin could stifle innovation and development.

As Andrew Ng, founder of the AI education company DeepLearning.AI and an adjunct computer science professor at Stanford University, put it in a recent interview with the World Economic Forum, "Regulations that impose burdensome requirements on open-source software or on AI technology development can hamper innovation, have anti-competitive effects that favour big tech, and slow our ability to bring benefits to everyone."

Other leaders think it may be impractical even to attempt to govern artificial intelligence technology directly. Instead, they argue, regulation should target the effects of AI once it has been developed.

"Artificial intelligence by the name is not something that you can actually govern," Khalfan Belhoul, chief executive officer of the Dubai Future Foundation, told the World Economic Forum on a recent episode of Radio Davos. "You can govern the sub-effects or the sectors that artificial intelligence can affect. And if you take them on a case-by-case basis, this is the best way to actually create some kind of a policy."

In some ways, this approach is already in action.

"There are a wide variety of laws in place around the world that were not necessarily written for AI, but they absolutely apply to AI. Privacy laws. Cybersecurity rules. Digital safety. Child protection. Consumer protection. Competition law," Brad Smith, vice-chair and president of Microsoft, said at Davos. "And now you have a new set of AI-specific rules and laws. And I do think there's more similarity than most people assume."

But others say there should be some standard set of rules by which to regulate AI technologies specifically.

Yet, as Wendell Wallach points out, some industries already have regulations in place that should also apply to AI. Wallach is a senior scholar at the Carnegie Council for Ethics in International Affairs, where he co-directs the AI and Equality Initiative, and is the author of the book Dangerous Master: How to Keep Technology from Slipping Beyond Our Control.

In terms of governing outcomes, regulations already exist – in some industries more than others. "Healthcare is pretty well regulated already, at least in the United States and Europe," says Wallach. "In a sense, many of the AI applications are going to be regulated by what already exists. So, the question is, what additional [regulations] do you need? You probably need different forms of testing and compliance in some areas."

For example, in the US, healthcare regulations hold that a physician has a duty of care; if they misdiagnose a patient, they could be liable for medical malpractice. If a doctor relies on AI to help diagnose patients and gets a diagnosis wrong, they could still be liable (although, experts note, there's the issue of plausible deniability: whether it will become common to blame the AI for making the mistake). But that means governing the outcome – the misdiagnosis.

And many experts think even that doesn't go far enough. They argue that more safety measures are needed to regulate the process itself: for example, quality-control assessments of the underlying data that led to the misdiagnosis.

"There will have to be different ways of demonstrating whether an AI is being implemented in a responsible way. And the question of how do you implement tests? How do you benchmark them?" said Josephine Teo, Singapore's Minister for Communications and Information, at a Davos panel. "These kinds of things are still very nascent. No one has answers just yet."

Overall, experts say, the key goal is to ensure that AI is used for good – whatever the approach.

"If you think about the work that's ahead of us – to deal with the climate crisis, to lift people's health and welfare, to make sure our kids get educated, and that people in their working lives can train for new skills… it's hard to see how we're going to do them without the power of AI," said Prabhakar. "In the American approach, we've always thought about doing this work of regulation as a means to that end – not just to protect rights, which is completely necessary not only to protect national security, but also to achieve these great aspirations."