Terrorists 'certain' to get killer robots, says defence giant

Getty Images

Rogue states and terrorists will get their hands on lethal artificial intelligence "in the very near future", a House of Lords committee has been told.

Alvin Wilby, vice-president of research at French defence giant Thales, which supplies reconnaissance drones to the British Army, said the "genie is out of the bottle" with smart technology.

And he raised the prospect of attacks by "swarms" of small drones that move around and select targets with only limited input from humans.

"The technological challenge of scaling it up to swarms and things like that doesn't need any inventive step," he told the Lords Artificial Intelligence committee.

"It's just a question of time and scale and I think that's an absolute certainty that we should worry about."

The US and Chinese militaries are testing swarming drones - dozens of cheap unmanned aircraft that can be used to overwhelm enemy targets or defend humans from attack.

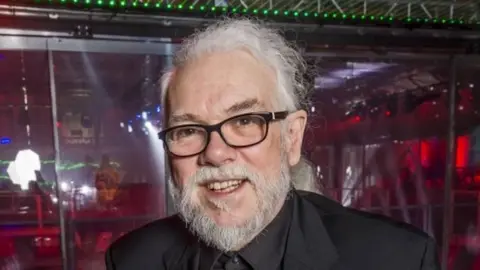

Noel Sharkey, emeritus professor of artificial intelligence and robotics at the University of Sheffield, said he feared "very bad copies" of such weapons - without safeguards built in to prevent indiscriminate killing - would fall into the hands of terrorist groups such as so-called Islamic State.

This was as big a concern as "authoritarian dictators getting a hold of these, who won't be held back by their soldiers not wanting to kill the population," he told the Lords Artificial Intelligence committee.

He said IS was already using drones as offensive weapons, although they were currently remote-controlled by human operators.

But the "arms race" in battlefield artificial intelligence meant smart drones and other systems that roamed around firing at will could soon be a reality.

"I don't want to live in a world where war can happen in a few seconds accidentally and a lot of people die before anybody stops it", said Prof Sharkey, who is a spokesman for the Campaign to Stop Killer Robots.

The only way to prevent this new arms race, he argued, was to "put new international restraints on it", something he was promoting at the United Nations as a member of the International Committee for Robot Arms Control.

But Prof Wilby, whose company markets technology to combat drone attacks, said such a ban would be "misguided" and difficult to enforce.

THOMAS COEX

He said there was already an international law of armed conflict, which was designed to ensure armed forces "use the minimum force necessary to achieve your objective, while creating the minimum risk of unintended consequences, civilian losses".

The Lords committee, which is investigating the impact of artificial intelligence on business and society, was told that developments in AI were being driven by the private sector, in contrast to previous eras, when the military led the way in cutting-edge technology. This made it more difficult to stop the technology falling into the wrong hands.

Britain's armed forces do not use AI in offensive weapons, the committee was told, and the Ministry of Defence has said it has no intention of developing fully autonomous systems.

But critics, such as Prof Sharkey, say the UK needs to spell out its commitment to banning AI weapons in law.