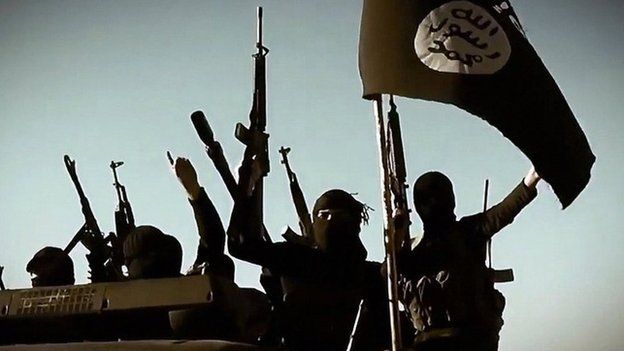

Theresa May warns tech firms over terror content

Technology companies must go "further and faster" in removing extremist content, Theresa May has told the United Nations general assembly.

The prime minister will also host a meeting with other world leaders and Facebook, Microsoft and Twitter.

She will challenge social networks and search engines to find fixes to take down terrorist material in two hours.

Tech giant Google said firms were doing their part but could not do it alone - governments and users needed to help.

The prime minister has repeatedly called for an end to the "safe spaces" she says terrorists enjoy online.

Ministers have called for limits to end-to-end encryption, which stops messages being read by third parties if they are intercepted, and measures to curb the spread of material on social media.

At the general assembly, the prime minister hailed progress made by tech companies since the establishment in June of an industry forum to counter terrorism.

But she urged them to go "further and faster" in developing artificial intelligence solutions to automatically reduce the period in which terror propaganda remains available, and eventually prevent it appearing at all.

"This is a major step in reclaiming the internet from those who would use it to do us harm," she said.

Together, the UK, France and Italy will call for a target of one to two hours to take down terrorist content wherever it appears.

Internet companies will be given a month to show they are taking the problem seriously, with ministers at a G7 meeting on 20 October due to decide whether enough progress has been made.

Kent Walker, general counsel for Google, who is representing tech firms at Mrs May's meeting, said they would not be able to "do it alone".

"Machine-learning has improved but we are not all the way there yet," he told BBC Radio 4's Today programme, in an exclusive interview.

"We need people and we need feedback from trusted government sources and from our users to identify and remove some of the most problematic content out there."

Asked about carrying bomb-making instructions on sites, he said: "Whenever we can locate this material, we are removing it.

"The challenge is once it's removed, many people re-post it or there are copies of it across the web.

"And so the challenge of identifying it and identifying the difference between bomb-making instructions and things that might look similar that might be perfectly legal - might be documentary or scientific in nature - is a real challenge."

A Downing Street source said: "These companies have some of the best brains in the world.

"They should really be focusing on what matters, which is stopping the spread of terrorism and violence."

Technology companies defended their handling of extremist content after criticism from ministers following the London Bridge terror attack in June.

Google said it had already spent hundreds of millions of pounds on tackling the problem.

Facebook and Twitter said they were working hard to rid their networks of terrorist activity and support.

YouTube told the BBC that it received 200,000 reports of inappropriate content a day, but managed to review 98% of them within 24 hours.

Addressing the UN General Assembly, Mrs May also said terrorists would never win, but that "defiance alone is not enough".

"Ultimately it is not just the terrorists themselves who we need to defeat. It is the extremist ideologies that fuel them. It is the ideologies that preach hatred, sow division and undermine our common humanity," she said.

'Mystified'

A new report out on Tuesday found that online jihadist propaganda attracts more clicks in the UK than in any other country in Europe.

The study by the centre-right think tank, Policy Exchange, suggested the UK public would support new laws criminalising reading content that glorifies terror.

Google said it will give £1m to fund counter-terrorism projects in the UK, part of a $5m (£3.7m) global commitment.

The search giant has faced criticism about how it is addressing such content, particularly on YouTube.

The funding will be handed out in partnership with UK-based counter-extremist organisation the Institute for Strategic Dialogue (ISD).

An independent advisory board will be accepting the first round of applications in November, with grants of between £2,000 and £200,000 awarded to successful proposals.

ISD chief executive Sasha Havlicek said: "We are eager to work with a wide range of innovators on developing their ideas in the coming months."

'International consensus'

A spokesman for the Global Internet Forum to Combat Terrorism, which is formed of tech companies, said combating the spread of extremist material online required responses from government, civil society and the private sector.

"Together, we are committed to doing everything in our power to ensure that our platforms are not used to distribute terrorist content," said the spokesman.

Brian Lord, a former deputy director for Intelligence and Cyber Operations at UK intelligence monitoring service GCHQ, said the UN was "probably the best place" to raise the matter as there was a need for "an international consensus" over the balance between free speech and countering extremism.

He told BBC Radio 4's Today programme: "You can use a sledgehammer to crack a nut and so, actually, one can say: well just take a whole swathe of information off the internet, because somewhere in there will be the bad stuff we don't want people to see.

"But then that counters the availability of information," he said, adding that what is seen as "free speech" in one country might be seen as something which should be taken down in another.