Inside the secret world of trading nudes

BBC

Women are facing threats and blackmail from a mob of anonymous strangers after their personal details, intimate photos and videos were shared on the social media platform Reddit. The BBC has unmasked the man behind one of the groups, thanks to a second-hand cigarette lighter.

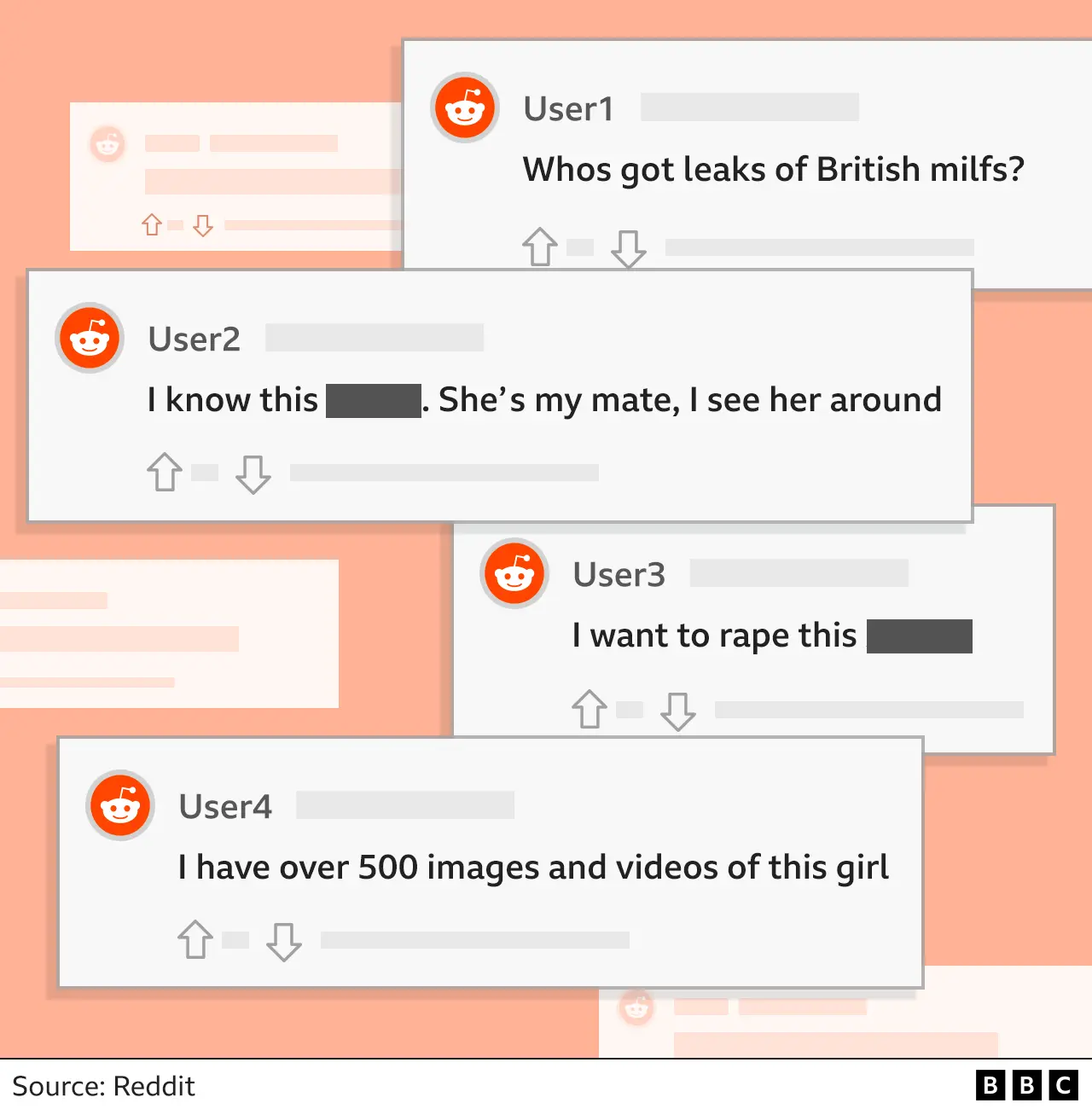

"£5 for her nudes, DM me."

"I've got some of her vids looking to trade."

"What are we gonna do to her?"

I felt sick as I scrolled through the images and comments online.

There were thousands of photographs. A seemingly endless stream of naked or partially dressed women. Underneath, men were posting vicious commentary about the women, including rape threats. Much of what I saw was too explicit to share here.

A tip-off from a friend had brought me to these images. One of her pictures had been lifted from Instagram and posted on Reddit. It was not a nude but was still accompanied by sexual and degrading language. She was concerned for herself and other women.

What I found was a marketplace. Hundreds of anonymous profiles were dedicated to sharing, trading and selling explicit images - and it all appeared to be without the permission of the women pictured.

It seemed like a new evolution of so-called revenge porn, where private sexual material is published online without consent, often by embittered ex-partners.

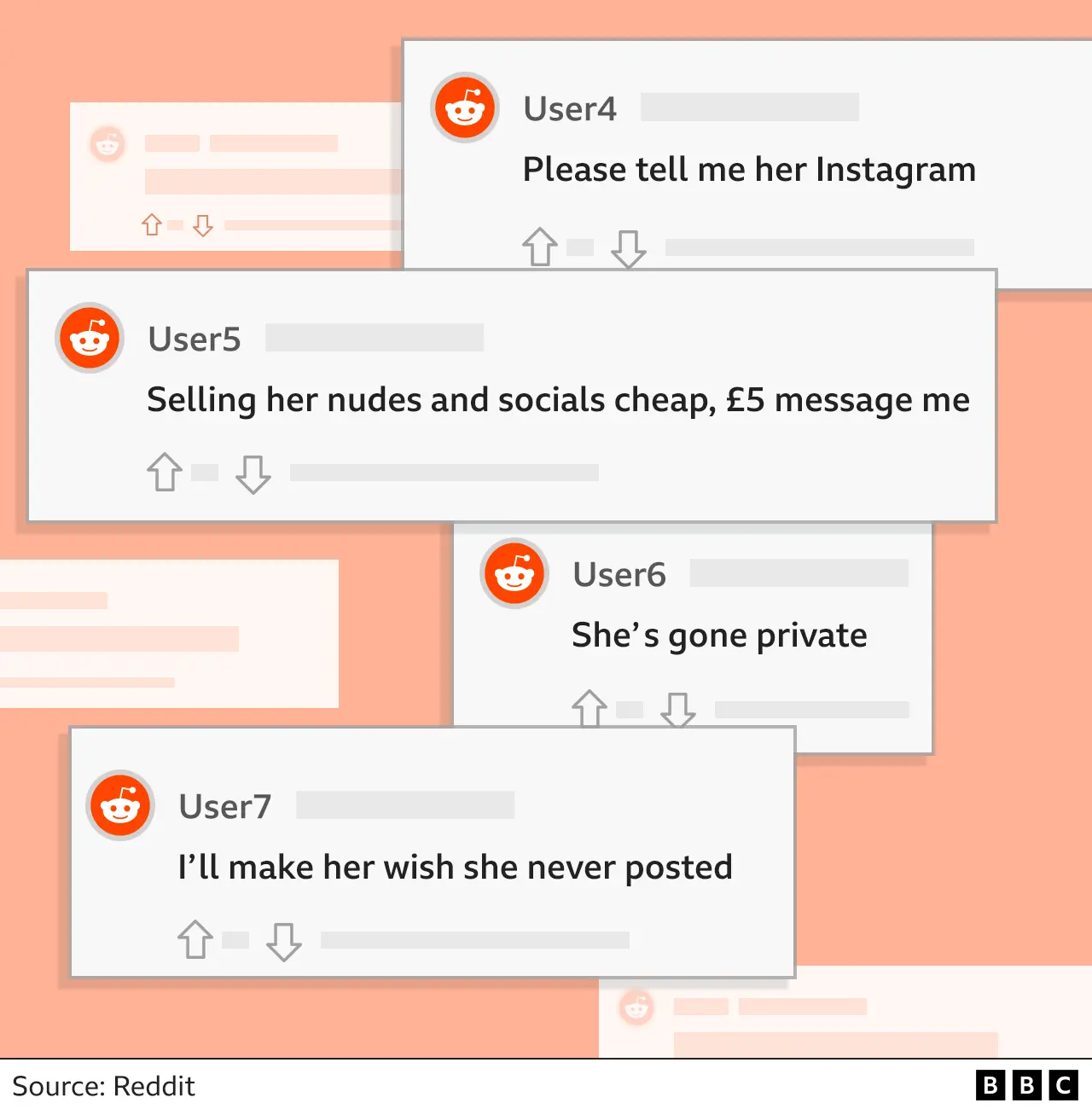

Not only were these intimate images being shared for an audience of thousands, but men - lurking behind the mask of anonymity - were teaming up to expose the real-life identities of these women, a practice known as doxing.

Addresses, phone numbers and social media handles were being swapped online - the women then being targeted with lurid sexual comments, threats and blackmail.

It felt like I had stumbled into a very dark corner of the internet, but this was all happening on a major social media platform.

Reddit brands itself as "the front page of the internet". It has built an audience of about 50 million daily users - roughly four million in the UK - by letting people set up and run forums, known as "subreddits", dedicated to all kinds of interests. Most subreddits are harmless, but Reddit has a history of hosting controversial sexual content.

In 2014, a huge cache of private images of celebrities was shared on the site, and four years later Reddit shut down a group which was using "deepfake" technology - a kind of artificial intelligence - to superimpose celebrities into porn videos.

Responding to these controversies, the US-based company introduced stricter rules and strengthened its ban on posting or threatening to post intimate or sexually explicit media of people without their consent.

I wanted to understand how women's intimate photographs were still being shared on Reddit and what it was like for those affected.

Then I wanted to find out who was behind it.

I could see that Reddit's ban wasn't working.

We found dozens of subreddits dedicated to sharing intimate images of women from all over the UK.

The first one I looked at was focused on South Asian women and had more than 20,000 users, most of whom seemed to be men from the same community, with comments in English, Hindi, Urdu and Punjabi. Some of the women I recognised because they had large social media followings. A few I even knew personally.

There were more than 15,000 images. We looked at a thousand of them and found sexually explicit pictures of 150 different women. All were being dehumanised as sexual objects in the comments. I was sure none of the women would have consented to appearing on this forum.

Some, like the one my friend discovered of herself, were images lifted from the women's social media and weren't explicit. But they were accompanied by degrading comments and sometimes requests to hack victims' phones and computers for nudes.

One woman we contacted says she now gets intrusive, sexual messages on social media "every day" after the group posted an image of her in a crop top from Instagram, alongside comments about raping her.

The men on the subreddit were also sharing and selling naked photos of the women. These images looked like selfies which had been sent between partners and were not meant for public consumption.

There were also videos - even more graphic - where it appeared as though the women had been secretly filmed during sex.

'I will find you'

One thread of messages featured images of a naked woman giving oral sex.

"Anyone got any vids of this [expletive]?" an anonymous user asked, using a derogatory name for her.

"I have her whole folder for £5, snap me," another said.

"What's her socials," asked a third.

Ayesha - not her real name - discovered videos of her were being shared on the subreddit last year. She believes she had been secretly filmed by an ex-partner.

She didn't just have to deal with the violation of her trust, she also faced a wave of harassment and threats on social media when her personal details were posted on the forum.

"If you don't have sex with me, I'll send it to your parents. I will come and find you… If you don't agree to having sex with me, I will rape you." Her harassers tried to blackmail her for more images as well.

"Being a Pakistani girl, it's not right in our community for us to even get sexual before marriage or anything like that - that's not acceptable," she says.

Ayesha stopped socialising or even leaving the house, and eventually tried to take her own life. After her suicide attempt, she had to tell her parents what had happened. Her mother and father both fell into depression, she says.

"I felt so ashamed of everything that was going on and that I'd put them in this situation," she says.

Ayesha contacted Reddit several times. On one occasion, a video was deleted almost immediately but it took four months to remove another. And it didn't end there. The deleted content had already been shared on other social media sites and eventually appeared back on the original subreddit a month later.

The subreddit that shamed and harassed Ayesha was set up and moderated by a user called Zippomad - a name that would eventually provide the clue to tracking him down.

As moderator, Zippomad was supposed to make sure his subreddit discussion group followed Reddit's rules. But he did the opposite.

Since first tracking his subreddit, I've seen him create new versions of it three times - after each previous version was shut down by Reddit due to complaints. Each new incarnation used a variation of the same name, which includes a racial slur too offensive to repeat. Each one was filled with the same material - and each had thousands of active users.

The trade in nudes has become widespread enough for experts in online abuse to give it a name: collector culture.

Clare McGlynn, a law professor at the University of Durham who's an expert on this kind of online abuse, says: "This is not a phenomenon of perverts or weirdos or other oddballs who are doing this. There are too many of them, and it's tens of thousands of men."

Trading images takes place in small, private chat groups in messaging apps as well as websites where tens of thousands of men gather, Prof McGlynn says.

She says many of the men involved gain status in these communities by building up large collections of non-consensual images. This obsessive hoarding can make it hard to stamp out, as Ayesha found out when deleted videos were simply reposted from other collections.

Monika Plaha investigates the disturbing online trade in sexually explicit images and video of women, often taken and posted on social media without their consent.


Seven women who've tried to get their images removed from Reddit told me they didn't feel the company was doing enough to help. Four said the material was never removed by Reddit and some had to wait eight months before content was deleted.

Reddit has told us it removed just over 88,000 non-consensual sexual images last year and says it takes the issue "extremely seriously".

It says it uses automated tools and has a team of staff to find and remove intimate images published without permission. It adds that it regularly takes action, including closing down forums.

"We know we have more work to do to prevent, detect, and action this content even more quickly and accurately, and we are investing now in our teams, tools, and processes to achieve this goal," a company spokesperson said.

Like tech companies, UK law is struggling to protect women from having their private images shared online.

When Georgie was contacted by a stranger and told explicit images of her were being shared on the internet, she went to the police. She knew that only one person should have had access to them.

"I can't even compute how many people might have already seen them? And there is no way of stopping more people seeing them. In this moment, right now, people might be looking at them," she says.

Her ex-partner texted her to admit sharing the images, but she says he told her "he didn't mean to hurt or embarrass me".

That part of his confession turned out to be a legal loophole. Existing legislation against revenge porn across the UK requires proof that the person sharing photos without permission is doing it to cause distress to the victim. The Law Commission, an independent advisory body to the government, has recommended removing the requirement to prove intent to cause harm. But the Online Safety Bill currently progressing through Parliament does not include that change.

I wanted to track down Zippomad, the Reddit user who created the forum targeting South Asian women - including Ayesha. As I looked through his history of comments on the site, there was no real name, email address or pictures to be found. Only his username provided a clue as to who he was - he collected Zippo lighters and had one for sale. So I got in touch using a fake account and offered to buy it.

He agreed to set up a meeting and our undercover reporter finally came face-to-face with the man who had created the forum where the privacy of so many women had been violated.

His name is Himesh Shingadia. He's university-educated and works as a manager at a large company. It wasn't who I expected to find at all.

After Panorama contacted him, Mr Shingadia deleted his subreddit. In a statement, he says the group had been intended to "appreciate South Asian women". Due to the high number of users, he says he found it impossible to moderate the forum.

He says he never shared anyone's private details or traded images himself and says he helped to remove some sexually explicit material when asked to by women.

"Zippomad is deeply embarrassed and ashamed of his actions, this does not reflect his real personality," the statement says.

Reddit has also removed the other, similar groups that we highlighted to the company.

It means almost a thousand women have, at last, had their images taken down - but that's little comfort after the pain of unwanted exposure.

Tech companies and legislators will need to make changes to prevent more women from being exploited by this trade.

As Georgie says about the ex-partner who shared her images: "I don't want to punish him. I want him to never do it again."

If you have been affected by any of the issues raised, help and advice are available.