WhatsApp: We won't lower security for any government

Getty Images

The boss of WhatsApp says it will not "lower the security" of its messenger service.

If asked by the government to weaken encryption, it would be "very foolish" to accept, Will Cathcart told the BBC.

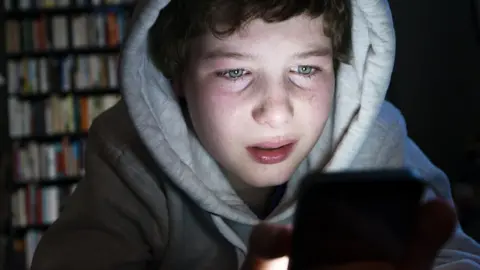

Government plans to detect child sex-abuse images include the possible scanning of private messages.

The NSPCC has criticised WhatsApp's position, saying that direct messaging is "the front line" of child sexual abuse.

The government says tech firms need to tackle child-abuse material online. Its proposals are part of the Online Safety Bill, which has been delayed until the autumn.

"They shouldn't ignore the clear risk that end-to-end encryption could blind them to this content and hamper efforts to catch the perpetrators," said a government spokesperson.

"We continue to work with the tech sector to support the development of innovative technologies that protect public safety without compromising on privacy."

End-to-end encryption (E2EE) provides the most robust level of security, because - by design - only the intended recipient holds the key to decrypt the message, which is essential for private communication.

The technology underpins the online exchanges on apps including WhatsApp and Signal and - optionally - on Facebook Messenger and Telegram.

Only the sender and receiver can read those messages - not law enforcement or the technology giants.

The conundrum currently facing the technology community is the UK government's pledge to support the development of tools which could detect illegal pictures within or around an E2EE environment, while respecting user privacy.

Experts have questioned whether this is achievable - and most conclude client-side scanning is the only tangible option. But this destroys the fundamentals of E2EE, as messages would no longer be private.

'Business decision'

"Client-side scanning cannot work in practice," Mr Cathcart said.

Because millions of people use WhatsApp to communicate across the world, it needs to maintain the same standards of privacy across every country, he added.

"If we had to lower security for the world, to accommodate the requirement in one country, that...would be very foolish for us to accept, making our product less desirable to 98% of our users because of the requirements from 2%," Mr Cathcart told BBC News.

The EU Commission has said technology companies should "detect, report, block and remove" child sex abuse images from their platforms.

"What's being proposed is that we - either directly or indirectly through software - read everyone's messages," Mr Cathcart said. "I don't think people want that."

'Mass surveillance'

The UK and EU plans echo Apple's effort last year to scan photographs on people's iPhones for abusive content before they were uploaded to iCloud. But Apple rolled back the plans after privacy groups claimed the technology giant had created a security backdoor in its software.

Ella Jakubowska, policy adviser at campaign group European Digital Rights, said: "Client-side scanning is almost like putting spyware on every person's phone.

"It also creates a backdoor for malicious actors to have a way in to be able to see your messages."

Dr Monica Horten, policy manager for the campaign organisation Open Rights Group, agreed: "If Apple can't get it right, how can the government?

"Client-side scanning is a form of mass surveillance - it is a deep interference with privacy."

Prof Alan Woodward, of the University of Surrey, told BBC News that scanning could be misused: "Of course, if you say: 'Do you think children should be kept safe?' everyone's going to say 'yes'.

"But if you then say to someone: 'Right, I'm going to put something on your phone that's going to scan every single one of your images and compare it against the database,' then suddenly you start to realise the implications."

'Very effective'

Mr Cathcart said WhatsApp already detected hundreds of thousands of child sex-abuse images.

"There are techniques that are very effective and that have not been adopted by the industry and do not require us sacrificing everyone's security," he said. "We report more than almost any other internet service in the world."

But that claim has angered children's charities.

'Front line'

"The reality is that as it stands right now, under this cloak of encryption, they are identifying only a fraction of the levels of abuse that the sister products, Facebook and Instagram, are able to detect," said Andy Burrows, NSPCC head of child safety online policy.

He called direct messaging "the front line" of child sexual abuse.

"Two-thirds of child abuse that is currently identified and taken down is seen and removed in private messaging," Mr Burrows told BBC News.

"It's increasingly clear that it doesn't have to be children's safety and adult privacy that are pitted against each other. We want a discussion about what a balanced settlement can look like."

For the full debate, you can listen to 'The battle over encrypted messaging' on Tech Tent on BBC Sounds.

Follow Shiona McCallum on Twitter @shionamc.