AI emotion-detection software tested on Uyghurs


A camera system that uses AI and facial recognition, and is intended to reveal states of emotion, has been tested on Uyghurs in Xinjiang, the BBC has been told.

A software engineer claimed to have installed such systems in police stations in the province.

A human rights advocate who was shown the evidence described it as shocking.

The Chinese embassy in London has not responded directly to the claims but says the political and social rights of all ethnic groups are guaranteed.

Xinjiang is home to 12 million ethnic minority Uyghurs, most of whom are Muslim.

Citizens in the province are under daily surveillance. The area is also home to highly controversial "re-education centres", called high-security detention camps by human rights groups, where it is estimated that more than a million people have been held.

Beijing has always argued that surveillance is necessary in the region because it says separatists who want to set up their own state have killed hundreds of people in terror attacks.


The software engineer agreed to talk to the BBC's Panorama programme on condition of anonymity, because he fears for his safety. The company he worked for is also not being named.

But he showed Panorama five photographs of Uyghur detainees on whom, he claimed, the emotion recognition system had been tested.

"The Chinese government use Uyghurs as test subjects for various experiments just like rats are used in laboratories," he said.

And he outlined his role in installing the cameras in police stations in the province: "We placed the emotion detection camera 3m from the subject. It is similar to a lie detector but far more advanced technology."

He said officers used "restraint chairs" which are widely installed in police stations across China.

"Your wrists are locked in place by metal restraints, and [the] same applies to your ankles."

He provided evidence of how the AI system is trained to detect and analyse even minute changes in facial expressions and skin pores.

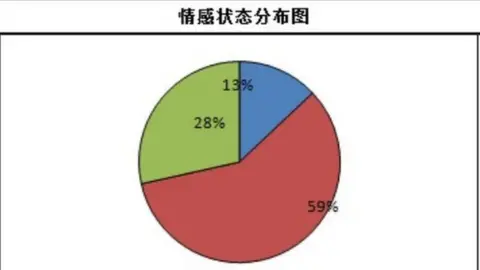

According to his claims, the software creates a pie chart, with the red segment representing a negative or anxious state of mind.

He claimed the software was intended for "pre-judgement without any credible evidence".

The Chinese embassy in London did not respond to questions about the use of emotion recognition software in the province but said: "The political, economic, and social rights and freedom of religious belief in all ethnic groups in Xinjiang are fully guaranteed.

"People live in harmony regardless of their ethnic backgrounds and enjoy a stable and peaceful life with no restriction to personal freedom."

The evidence was shown to Sophie Richardson, China director of Human Rights Watch.

"It is shocking material. It's not just that people are being reduced to a pie chart, it's people who are in highly coercive circumstances, under enormous pressure, being understandably nervous and that's taken as an indication of guilt, and I think, that's deeply problematic."

Suspicious behaviour

According to Darren Byler, from the University of Colorado, Uyghurs routinely have to provide DNA samples to local officials, undergo digital scans and most have to download a government phone app, which gathers data including contact lists and text messages.

"Uyghur life is now about generating data," he said.

"Everyone knows that the smartphone is something you have to carry with you, and if you don't carry it you can be detained, they know that you're being tracked by it. And they feel like there's no escape," he said.

Most of the data is fed into a computer system called the Integrated Joint Operations Platform, which Human Rights Watch claims flags up supposedly suspicious behaviour.

"The system is gathering information about dozens of different kinds of perfectly legal behaviours including things like whether people were going out the back door instead of the front door, whether they were putting gas in a car that didn't belong to them," said Ms Richardson.

"Authorities now place QR codes outside the doors of people's homes so that they can easily know who's supposed to be there and who's not."

Orwellian?

There has long been debate about how closely tied Chinese technology firms are to the state. US-based research group IPVM claims to have uncovered evidence in patents filed by such companies that suggests facial recognition products were specifically designed to identify Uyghur people.

A patent filed in July 2018 by Huawei and the Chinese Academy of Sciences describes a face recognition product that is capable of identifying people on the basis of their ethnicity.

Huawei said in response that it did "not condone the use of technology to discriminate or oppress members of any community" and that it was "independent of government" wherever it operated.

IPVM has also found a document which appears to suggest Huawei was developing technology for a so-called One Person, One File system.

"For each person the government would store their personal information, their political activities, relationships... anything that might give you insight into how that person would behave and what kind of a threat they might pose," said IPVM's Conor Healy.


"It makes any kind of dissidence potentially impossible and creates true predictability for the government in the behaviour of their citizens. I don't think that [George] Orwell would ever have imagined that a government could be capable of this kind of analysis."

Huawei did not specifically address questions about its involvement in developing technology for the One Person, One File system but said: "Huawei opposes discrimination of all types, including the use of technology to carry out ethnic discrimination.

"As a privately-held company, Huawei is independent of government wherever we operate. We do not condone the use of technology to discriminate against or oppress members of any community."

The Chinese embassy in London said it had "no knowledge" of these programmes.

IPVM also claimed to have found marketing material from Chinese firm Hikvision advertising a Uyghur-detecting AI camera, and a patent for software developed by Dahua, another tech giant, which could also identify Uyghurs.

Dahua said its patent referred to all 56 recognised ethnicities in China and did not deliberately target any one of them.

It added that it provided "products and services that aim to help keep people safe" and complied "with the laws and regulations of every market" in which it operates, including the UK.

Hikvision said the details on its website were incorrect and "uploaded online without appropriate review", adding that it did not sell or have in its product range "a minority recognition function or analytics technology".

Dr Lan Xue, chairman of China's national committee on AI governance, said he was not aware of the patents.

"Outside China there are a lot of those sorts of charges. Many are not accurate and not true," he told the BBC.

"I think that the Xinjiang local government had the responsibility to really protect the Xinjiang people... if technology is used in those contexts, that's quite understandable," he said.

The Chinese embassy in London offered a more robust defence, telling the BBC: "There is no so-called facial recognition technology featuring Uyghur analytics whatsoever."

Daily surveillance

China is estimated to be home to half of the world's almost 800 million surveillance cameras.

It also has a large number of smart cities, such as Chongqing, where AI is built into the foundations of the urban environment.

Chongqing-based investigative journalist Hu Liu told Panorama of his own experience: "Once you leave home and step into the lift, you are captured by a camera. There are cameras everywhere."

"When I leave home to go somewhere, I call a taxi, the taxi company uploads the data to the government. I may then go to a cafe to meet a few friends and the authorities know my location through the camera in the cafe.

"There have been occasions when I have met some friends and soon after someone from the government contacts me. They warned me, 'Don't see that person, don't do this and that.'

"With artificial intelligence we have nowhere to hide," he said.

Find out more about this on Panorama's Are You Scared Yet, Human?, available on iPlayer from 26 May.