TikTok failed to ban flagged 'child predator'

BBC

TikTok's pledge to take "immediate action" against child predators has been challenged by a BBC Panorama investigation.

The app says it has a "zero tolerance" policy against grooming behaviours.

But when an account created for the programme, which identified itself as belonging to a 14-year-old girl, reported an adult man for sending sexual messages, TikTok did not ban his account.

And it only took such action after Panorama sought an explanation.

The video-sharing platform claimed its moderators did not intervene in the first instance because the child's account had not made it clear the offending posts had been received via TikTok's direct messaging (DM) facility.

"We... have a duty to respect the privacy of our users," it told the BBC, adding that complaints do "not generally trigger a review" of DMs.

However, Panorama's account had in fact selected and submitted each of the seven chat messages involved via the app's own reporting tool.

Child safety experts say parents need to be aware of the risks involved with letting their children use TikTok.

"The thing with TikTok is it's fun, and I think whenever someone is having fun they're not recognising the dangers," said Lorin LaFave, founder of the Breck Foundation.

"[Predators] might be looking to groom a child, to exploit them, to get them to do something that could be harmful to them."

Sex-image message

TikTok does not allow an account to receive or send direct messages if the user registers themselves as being under 16.

But many of its youngest members get round this by lying about their date of birth when they join.

To simulate this, Panorama registered the account with a 16-year-old's birth date.

But the account's profile biography stated that its owner was a 14-year-old girl.

The team recruited a journalism graduate who creates TikToks for an internet search company.

She is 23, but posed as the 14-year-old, putting her pictures through photo-editing software to make her look younger.

Every day she posted videos, copying popular dances to trending tracks.

These featured hashtags including #schoollife and #schooluniform.

Over the period, the account picked up followers including what appeared to be older men.

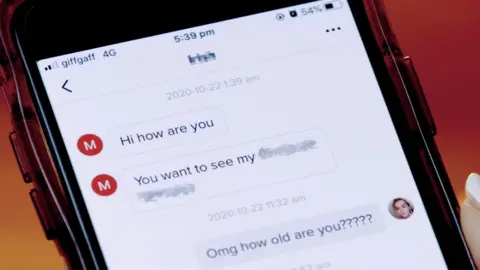

One sent a series of DMs in the early hours of the morning asking if the user wanted to see his penis, describing it in explicit terms.

When she responded, the "girl" asked how old he was, and he replied that he was 34.

She told him she was 14, to which he replied: "Ohhh you are under age sorry."

He did not send an image or follow-up DMs, but continued to like the account's videos.

All of his messages were reported to TikTok.

After three days, the man's account was still active, at which point Panorama contacted the company.

The following day, TikTok banned the man's account and blocked his smartphone from being able to set up a new one.

A spokesman noted the app offered privacy features to help parents avoid such problems.

"We offer all our users a high level of control over who can see and interact with the content they post," he said.

"These privacy settings can be set either at account level or on a video-by-video basis."

TikTok's moderators also terminated two other men's accounts without the need for follow-up prompts.

In both these cases, the men continued to send the "child" private messages even after she had told them "she" was 14.

TikTok safety tips:

If users register as being under 18 when they sign up, TikTok automatically suggests they restrict who sees their videos.

But many youngsters decide not to do this because they want to have a wider audience than just pre-approved friends.

Even so, their parents or guardians can still protect them.

One way to do this is to:

- Go to Settings

- Choose Family Pairing

- Scan a QR barcode to identify which is the adult's device and which the child's

The adults can then limit the types of content the child sees, and restrict who can send DMs or block private chats altogether.

The teens can later unpair the accounts, but doing so sends the adult an alert and gives them 48 hours to restore the link before the child can turn off the restrictions.

If parents do not want to install the app themselves, they can alter the settings of the child's app instead.

One option is to:

- Select Digital Wellbeing

- Select Restricted Mode

- Set a password

After doing this, the youngsters should only be able to see suitable videos, although TikTok acknowledges that its filters are not guaranteed to screen out all "inappropriate" content.

A further option is to:

- Choose Privacy

- Switch on Private Account and/or switch off Suggest Your Account To Others

The first option lets only approved users see the child's activity; the second stops their profile being shown to people interested in similar accounts.

Recommended videos

Panorama also spoke to one of the company's former moderators, who had worked in its London office.

The ex-employee explained that predators take advantage of the fact that TikTok's recommendation algorithm is designed to identify what users are interested in and then find them similar content.

"If you're looking at a lot of kids dancing sexually and you interact with that, it's going to give you more kids dancing sexually.

"Maybe it's a predator... they see these kids doing that and that's their way of engaging with these kids."

The interviewee - who asked to remain anonymous - left before TikTok's announcement earlier this year that it would stop using moderation staff based in Beijing.

But before this, employees at the firm's Beijing headquarters allegedly made key decisions.

"It felt like not very much was being done to protect people," the ex-worker said.

"Beijing were hesitant to suspend accounts or take stronger action on users who were being abusive.

"They would pretty much only ever get a temporary ban of some form, like a week or something.

"Moderators at least on the team I was on did not have the ability to ban accounts. They have to ask Beijing to do that. There were a lot of... clashes with Beijing."

TikTok says none of its content is moderated in China anymore - although it does use a team there to censor a sister-version of the app, Douyin, targeted at Chinese users.

"We have a dedicated and ever-growing expert team of over 10,000 people in 20 countries, covering 57 languages, who review and take action against content and accounts that violate our policies," said a TikTok spokesman.

TikTok added that it also used automated tools to screen content.

And it said agents based in Dublin, San Francisco and Singapore were available to deal with any pressing threats within five minutes "to ensure swift action is taken".

Viewers in the UK can watch Panorama: Is TikTok Safe? on BBC One on Monday 2 November at 19:35 GMT