Google seeks permission for staff to listen to Assistant recordings

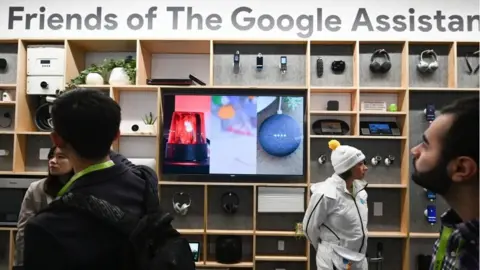

Getty Images

Google has said it will let its human reviewers listen to audio recordings made by its virtual assistant only if users give it fresh permission to do so.

The company said the option had always been opt-in but it had not been explicit enough that people were involved in transcribing the clips.

Amazon and Facebook, by contrast, make users ask if they want to be excluded.

Google's move has been welcomed by digital rights campaigners.

"Companies should do the right thing and make sure people choose to be recorded," Open Rights Group executive director Jim Killock said.

"They shouldn't be forced into checking that every company isn't intruding into their homes and daily conversations."

Google has said about one in 500 of all user audio snippets would be subject to the human checks.

Leaked audio

The issue of technology-company workers listening to and transcribing audio recordings made via smart speakers and virtual assistant apps came to the fore in April, when the Bloomberg news agency reported Amazon, Google and Apple were all involved in the practice.

Staff label words and phrases in recordings to help improve voice-recognition software's accuracy.

Although it is common practice to improve machine-learning tools in this way, many customers had been unaware their recordings were being played back and listened to by other humans.

Part of the issue is virtual assistants often switch themselves on without being commanded, meaning users are not always aware of what is being captured.

These included cases of mistakenly recorded business phone calls containing private information, it said, as well as "blazing rows" and "bedroom conversations".

Hamburg's data protection commissioner launched an inquiry into the matter in August, at which point Google revealed it had suspended human reviews across the EU.

Sensitivity setting

In its latest blog, one of Google Assistant's managers apologised, saying the company had fallen "short of our high standards in making it easy for you to understand how your data is used".

Nino Tasca said Google would now require existing users who had previously agreed to let their audio be used to improve the Assistant to do so again.

And this time, the settings menu would make it obvious humans would be involved in the process.

In addition, he said, Google would soon roll out a feature that would let users adjust how sensitive their devices were to the activation commands.

This would allow consumers to choose whether to make the Assistant less likely to activate itself by accident at the cost of it being more likely to ignore the trigger words in a noisy environment.

In addition, Mr Tasca said, Google would soon "automatically delete the vast majority of audio data associated with your account that's older than a few months".

His post also promises further privacy measures will be implemented but does not commit Google to using only its own staff to carry out the work.

Part of the criticism technology companies have faced is they sometimes employ third-party contract workers, who are allowed to carry out the work at home or at other remote locations, posing challenges to keeping the recordings secure.

"We will continue to work with vendor partners that have gone through our security and privacy review process and audio review will take place on protected machines," a Google spokeswoman told BBC News.

That contrasts with Apple's approach. After it reviewed its rules last month, it said it would allow only its own employees to carry out the work from then on.