Live facial recognition surveillance 'must stop'

UK police and companies must stop using live facial recognition for public surveillance, politicians and campaigners have said.

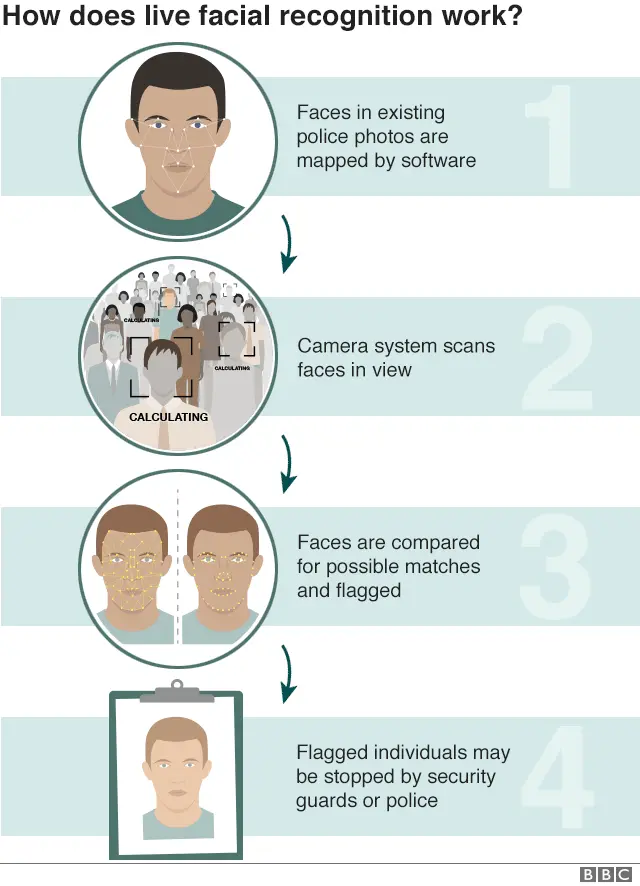

The technology allows faces captured on CCTV to be checked in real time against watch lists, often compiled by police.

Privacy campaigners say it is inaccurate and intrusive, and that it infringes on an individual's right to privacy.

But its makers say it helps protect the public because it can catch people such as terror suspects in a way police cannot.

The Home Office said it supported the police "as they trial new technologies to protect the public, including facial recognition, which helps them identify and locate suspects and criminals".

'Surveillance crisis'

A letter, written by privacy campaign group Big Brother Watch, has been signed by more than 18 politicians, including David Davis, Diane Abbott, Jo Swinson and Caroline Lucas. Twenty-five campaign groups, including Amnesty International and Liberty, plus academics and barristers, also signed.

They argue facial recognition is being adopted in the UK before it has been properly scrutinised by politicians.

The director of Big Brother Watch, Silkie Carlo, told the Victoria Derbyshire programme: "What we're doing is putting this to government to say: 'Please can we open this debate and have this conversation.

"'But for goodness sake, while it is going on, there is now a surveillance crisis on our hands that needs to be stopped urgently'."

Legal action

The King's Cross estate has recently been at the centre of controversy after it was revealed that its owners were using facial recognition technology without telling the public.

It then emerged that both the Metropolitan Police and British Transport Police had supplied the company with images for its database. Both had initially denied involvement.

South Wales Police was taken to the High Court over its trial of the technology by a man who was caught on camera. The court ruled the trial was lawful, although that decision is now being appealed.

Researchers have raised concerns that some systems are vulnerable to bias, as they are more likely to misidentify women and darker-skinned people.

Areeq Chowdhury, head of the Future Advocacy think tank, said this was due to factors such as colour contrast on people of colour and systems being confused by cosmetics, and because some systems have not been trained on sufficiently diverse datasets covering people from different demographics.

"You could see a situation where you are identifying innocent individuals who are from a particular minority. Which means they'll be questioned by the police even though they're innocent and they may even have their details and picture captured on record, despite having committed no crime," he said.

Intercept terrorists

Digital Barriers, a worldwide supplier of the technology, says facial recognition is an essential tool for counter-terrorism.

Its CEO, Zak Doffman, said: "Imagine I know there is a group of individuals in central London that want to do harm on a massive scale to the public. Would you have public support to use facial recognition to try and intercept that group of individuals before they can do harm? I would suggest almost categorically you would."

He added that he did not support indiscriminate use of the technology.

"I'll give you the opposite example, an individual has been kicked out of the pub for drinking too much on a Saturday night. The pub has taken a photo of that individual, should they then be prevented from getting into that establishment or other establishments because of that incident? I think you'll have very little public consent for that example.

"Unfortunately there's no clarity. There's no regulation that governs either case and that is the challenge."

The UK Surveillance Camera Commissioner, Tony Porter, says there must be a set of strict standards governing how the technology is used before it is formally adopted by police forces.

"There should be a standard around its siting, efficiency and effectiveness," he explained. "I suppose you might say, 'What is an appropriate force hit-rate that is tolerable against the totality?' There needs to be a lot more assurance to the public that any notion of bias through ethnic background is eradicated."

The Home Office said it welcomed a recent judgment confirming "there is a clear and sufficient legal framework for the use of live facial recognition technology in the UK".

It added the technology had demonstrated the ability to tackle crime and identify criminals in an efficient way that would not otherwise be possible.