Child abuse images being traded via secure apps

Images of child sexual abuse and stolen credit card numbers are being openly traded on encrypted apps, a BBC investigation has found.

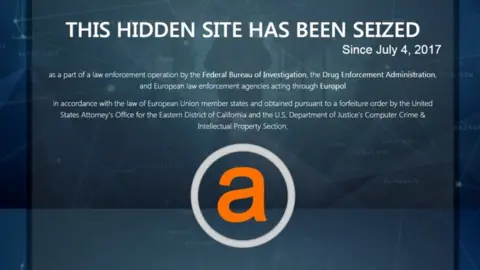

Security experts told Radio 4's File on 4 programme that the encrypted apps were taking over from the dark web as a venue for crime.

The secure messaging apps, including Telegram and Discord, have become popular with criminals following successful police operations against criminal markets on the dark web, a network that can only be accessed with special browsers.

The secure apps have both a public side and an encrypted side.

Often, the public side is listed online so users can search and join groups advertising drugs, stolen financial data and other illegal material.

Once a group is joined, however, messages are protected by end-to-end encryption, which generally puts them beyond the reach of law enforcement, experts say.

Abuse catalogues

The investigators found evidence that paedophiles were using both Telegram and Discord to give people access to abuse material, and that links to Telegram groups were buried in the public comments section of YouTube videos.

The comments contained code words that would be indexed by search engines, and the links, once clicked, took people to the closed group.

Researchers confirmed that at least one of these groups contained hundreds of indecent images of children.

There were good reasons why paedophiles hid links on YouTube, said cyber-crime expert Dr Victoria Baines, a former Europol officer and adviser to the UK's Serious and Organised Crime Agency, the National Crime Agency's predecessor.

"YouTube is indexed by Google, which means if you are an 'entry level', for want of a better phrase, viewer of child abuse material you may start Googling," she said.

"And while Google tries to put restrictions on that, [the links] are publicly accessible on the web, so it is a means of getting people who are curious or idly searching into a closed space, where they can access material."

YouTube said it had a zero-tolerance approach to child sexual abuse material and had invested heavily in technology, teams and partnerships to tackle the issue.

A spokesperson said that if it identified links, imagery or content promoting child abuse, the material was removed and the authorities were alerted.

A spokesman for Telegram said it processed reports from users and engaged in "proactive searches" to keep the platform free of abuse, including child abuse and terrorist propaganda.

It said reports about child abuse were usually processed within one hour.

Explicit photos

The BBC investigation also found that paedophiles are exploiting the Discord app, which is popular among young people, who use it to text and chat while gaming online.

It discovered a series of chatrooms openly promoted as suitable for 13 to 17-year-olds, but carrying highly sexualised descriptions aimed at persuading children to hand over explicit photos.

Ariel Ainhoren, head of research at security firm IntSights, said the firm had recently identified a group on Discord in which one user had posted a price list for child abuse imagery. The group was no longer active, but the user had given out an email address for sales queries that was still viewable online.

"The user was offering gigabytes of pornography or paedophile material: nine gigabytes for $50 (£39), 50 gigabytes for $500, and 2.2 terabytes, which is a huge amount, for around $2,500," said Mr Ainhoren.

Nine gigabytes could contain many thousands of images, depending on the file sizes.

The user also said he was selling access to child sexual abuse and rape forums.

File on 4 has passed details of the illegal material revealed by its investigation to the National Crime Agency.

Once told about the groups, Discord said: "The number of these violations makes up a tiny percentage of usage on Discord and the team is committed to improving our policies and processes to make it even smaller.

"Discord's Trust and Safety policy exists to proactively protect the safety of our users - on and off platform - and we have a variety of security methods that help users avoid unwanted or unknown contact.

"As all conversations are opt-in, we urge users to only chat with or accept invitations from individuals they already know."

It said it uses computer, human and community intelligence to spot violations of its rules.

Card crash

The radio investigation also unearthed widespread abuse of the apps to sell stolen payment card data.

One British victim's full name, address, date of birth, password, bank account and credit card details, including the three-digit security code, were published by criminals.

She said it was "very alarming" to see her details, which were being offered up for free as a "taster".

"Someone has a very sophisticated way of hacking into my information without me knowing it," said the woman, who is not being named by the BBC to protect her from potential harm.

Telegram was asked questions about the way it was being exploited by criminals and was also told about the trading of the woman's personal information.

Last year, Theresa May told the Davos meeting of world leaders that small technology platforms can quickly become "…home to criminals and terrorists".

She said: "We have seen that happen with Telegram and we need to see more co-operation from smaller platforms like this."

Security minister Ben Wallace said the government had set up a £1.9bn cyber-security programme to increase the police's capability to infiltrate criminal groups online.

He said the government was set to publish a White Paper looking at whether tech platforms need to have a duty of care towards users, to oblige them to remove illegal material from their platform.

"We are exploring in the online harm White Paper the area of duty of care, and if they don't fulfil that, then one of the things we are exploring is that there will be a regulator involved," he said.

File on 4's 'Swipe Right for Crime' is on BBC Radio 4 on Tuesday 19 February at 20:00 GMT and available afterwards on BBC Sounds.