Implicit bias: Is everyone racist?

Getty Images

Few people openly admit to holding racist beliefs, but many psychologists claim most of us are nonetheless unintentionally racist. We hold what are called "implicit biases". So what is implicit bias, how is it measured and what, if anything, can be done about it? David Edmonds has been investigating.

"Your data suggests a slight automatic preference for white people over black people."

That was hardly the result I'd hoped for. So am I racist? A bigot? That would be rather at odds with my self-image. But perhaps it's something I need to own up to?

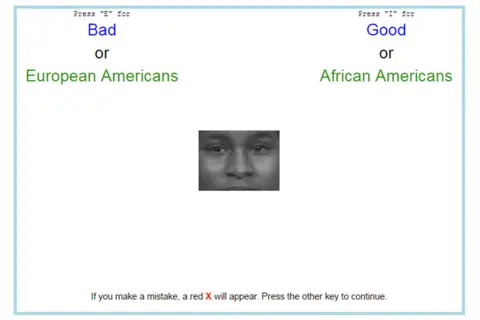

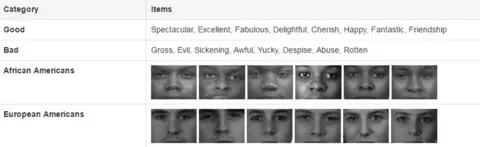

I'd just taken the Implicit Association Test (IAT), which is a way to identify implicit bias. And not just race bias, but also, for example, bias against gay, disabled or obese people.

For those who've never taken an IAT, it works a bit like this - using the race IAT as an example. You're shown words and faces. The words may be positive ones ("terrific", "friendship", "joyous", "celebrate") or negative ("pain", "despise", "dirty", "disaster"). In one part of the process you have to press a key whenever you see either a black face or a bad word, and press another key when you see either a white face or a good word. Then it switches round: one key for black faces and good words, another for white faces and bad words. That's a lot to keep in your head. And here's the rub. You've got to hit the appropriate key as fast as possible. The computer measures your speed.

You can take the test online at Harvard's Project Implicit site.

The idea behind the IAT is that some categories and concepts may be more closely linked in our minds than others. We may find it easier, and therefore quicker, to link black faces with nasty words than white faces with nasty words.
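The speed comparison described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the scoring procedure the real test uses, which is more elaborate. The function name and the response times are invented for the example.

```python
from statistics import mean


def latency_gap_ms(block_a_ms, block_b_ms):
    """Return mean response time in block B minus mean response time in block A."""
    return mean(block_b_ms) - mean(block_a_ms)


# Hypothetical response times in milliseconds, invented for illustration.
# Block A: one key for black faces or bad words, another for white faces or good words.
black_bad_white_good = [620, 580, 640, 600]
# Block B: the pairing is switched round.
black_good_white_bad = [700, 660, 720, 680]

# A positive gap means the person was slower once the pairing was switched.
gap = latency_gap_ms(black_bad_white_good, black_good_white_bad)
print(f"Slower by {gap} ms on average after the switch")
```

In this made-up case the person is slower when black faces share a key with good words, which is the kind of pattern the test reads as an automatic preference for white people.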

Over the past few decades, measures of explicit bias have been falling rapidly. For example, in Britain in the 1980s about 50% of the population stated that they opposed interracial marriages. That figure had fallen to 15% by 2011. The US has experienced a similarly dramatic shift. Going back to 1958, 94% of Americans said they disapproved of black-white marriage. That had fallen to just 11% by 2013.

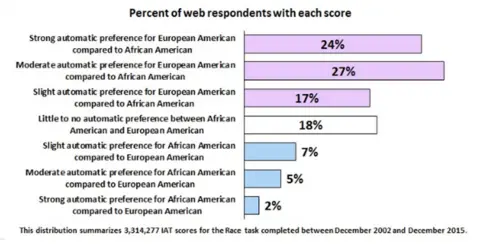

But implicit bias - bias that we harbour unintentionally - is much stickier, much more difficult to eradicate. At least that's the claim. The IAT, first introduced two decades ago as a means of measuring implicit bias, is now used in laboratories all across the world. From Harvard's Project Implicit site alone, it has been taken nearly 18 million times. And there's a pattern. My result was far from unique. On the race test, most people show some kind of pro-white, anti-black bias. They are speedier connecting black faces to bad concepts than white faces. (Black people are not immune to this phenomenon themselves.)

Implicit bias has been used to explain, at least partially, everything from the election of President Donald Trump (implicit bias against his female opponent) to the disproportionate number of unarmed black men who are shot in the US by police. The notion that many of us suffer from various forms of implicit bias has become so commonplace that it was even mentioned by Hillary Clinton in one of her presidential debates with Trump. "I think implicit bias is a problem for everyone," she said.

Trump responded a week later. "In our debate this week she accuses the entire country - essentially suggesting that everyone - including our police - are basically racist and prejudiced. It's a bad thing she said."

Indeed, it's hard to think of an experiment in social psychology which has had such far-reaching impact. Jules Holroyd, an expert in implicit bias at Sheffield University, traces some of the test's success to its providing an explanation "for why exclusion and discrimination of various forms persist".

"And that explanation," she adds, "is really appealing since it doesn't need to attribute ill-will or animosity to the people who are implicated in such exclusion."

Over the past decade or so, it's become routine for major companies to use implicit association tests in their diversity training. The aim is to demonstrate to staff, particularly those with the power to recruit and promote, that unbeknown to them, and despite their best intentions, they may nonetheless be prejudiced. The diversity training sector is estimated to be worth $8bn each year in the US alone.

There's an obvious business case for eliminating bias. If you can stop your staff behaving irrationally and prejudicially, you can employ and advance the best talent. Implicit bias down = profits up.

Find out more

- Listen to David Edmonds' radio documentary on Implicit Bias on Analysis, on BBC Radio 4 at 20:30 on Monday 5 June

- Or catch up afterwards on the BBC iPlayer

However, pretty much everything about implicit bias is contentious, including very fundamental questions. For example, there is disagreement about whether these states of mind are really unconscious. Some psychologists believe that at some level we are aware of our prejudices.

Then there's the IAT itself. There are two main problems with it. The first is what scientists call replicability. Ideally a study which produces a certain result on a Monday should produce the same result on Tuesday. But, says Greg Mitchell, a law professor at the University of Virginia, the replicability of the IAT is extremely poor. If the test suggests that you have a strong implicit bias against African Americans, then "if you take it even an hour or so later you'll probably get a very different score". One reason for this is that your score seems to be sensitive to the circumstances in which you take it. It's possible that your result will depend on whether you take the test before - or after - a hearty lunch.

More fundamentally, there appears to be a very tenuous relationship between the IAT and behaviour. That is to say, if your colleague, Person A, does worse in the IAT than another colleague, Person B, it would be far too hasty to conclude that Person A will exhibit more discriminatory behaviour in the workplace. In so far as there is a link between the IAT and behaviour generally, it is shaky.

Defenders of the IAT counter that even a weak correlation between IAT and behaviour matters. Naturally, human behaviour is hugely complex; we are influenced by numerous factors, including the weather, our mood, our blood-sugar levels. But each day there are millions of employment decisions, legal judgements and student assessments. If even a small fraction of these were infected by implicit bias, the numbers affected overall would be significant.
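The arithmetic behind that argument is simple enough to sketch. The figures below are invented purely to show how a small rate adds up at scale. They are not estimates from any study, and the function name is hypothetical.

```python
def decisions_affected(decisions_per_day, affected_per_thousand, days=365):
    """Count decisions touched by bias over a period, using whole numbers only."""
    affected_each_day = decisions_per_day * affected_per_thousand // 1000
    return affected_each_day * days


# Hypothetical inputs: one million decisions a day, one in every thousand affected.
yearly_total = decisions_affected(1_000_000, 1)
print(f"{yearly_total:,} decisions affected in a year")
```

Even at a rate of one in a thousand, the yearly total in this made-up case runs to hundreds of thousands of decisions.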

Over the past few years, the pro- and anti-IAT scholars have lobbed various competing research papers at each other. One study looked at black and white patients with cardiovascular symptoms: the puzzle was why doctors often treated black and white patients differently. Researchers found that whether doctors treated races differently was correlated with how they performed on the IAT. Another study presented participants with white and black faces followed by pictures of either a gun or some other object. The task was to identify as quickly as possible whether the object was actually a gun. It turned out that people were more likely to identify a non-gun object as a gun if this had been preceded by a picture of a black person. What's more, this was linked to the IAT: poor IAT performers were prone to make more errors.

But those who are sceptical about the idea of a link between behaviour and the IAT cite other studies. One experiment purported to show that police officers who performed poorly on the IAT were not more likely to shoot unarmed black suspects than unarmed white suspects. And as for the diversity industry, research and opinion differ about whether raising awareness of possible bias helps resolve the problem, or actually makes it worse, by making people more race-conscious. According to Greg Mitchell, "The evidence right now is that these programmes are not having any direct effects on implicit bias or on behaviour."

It's a confusing picture. But putting the contentious IAT to one side, many scholars claim that there are other ways to identify implicit bias. "The IAT is not the only paradigm in which we observe unintentional bias, and so even if it did turn out that the IAT is a poor measure of unintentional bias, that doesn't imply that the phenomenon isn't real," says philosopher Sophie Stammers, of Birmingham University. There have been studies, for example, showing employers are more likely to respond positively to CVs with male names rather than female, or with stereotypically white names than black.

Meanwhile, Sarah Jane Leslie, from Princeton, has produced some fascinating research into why some academic disciplines - such as maths, physics and her own area, philosophy - have such a paucity of women. Her explanation is that some disciplines - such as philosophy - emphasise the need for raw brilliance to be successful, while others - such as history - are more likely to stress the importance of dedication and hard work. "Since there are cultural stereotypes that link men more than women with that kind of raw brilliance", Leslie says, "we predicted and indeed found that those disciplines that emphasise brilliance were disciplines in which there were far fewer women." In popular culture, it is hard to think of a female equivalent to Sherlock Holmes, for example, a detective whose astonishing deductions were a product of his singular genius.

How early in our lives do we absorb these cultural stereotypes? Sarah Jane Leslie conducted one study with children. Boys and girls were told about a person who was really clever, and given no clue as to the person's gender. Then they were shown four pictures, two women and two men, and were asked which of these people was the clever person. Aged five, boys and girls were just as likely to pick out a man as a woman.

Within a year the culture in which we swim appears to have had an effect. "Aged six," says Leslie, "girls are significantly less likely than boys to think that a member of their own gender can be that really, really smart person."

The claim that most of us suffer from various forms of implicit bias is all of a piece with the explosion of research into the irrationality of our reasoning, decisions and beliefs. We are not the cogent, systematic and logical creatures we might like to assume. The findings of the IAT, and findings on implicit bias more generally, are unquestionably important. But there's still a lot we don't know. We still need a lot more rigorous research into how implicit bias interacts with behaviour, and how it can best be overcome.

Two weeks after taking my first IAT, I took it again. I again showed a bias, but this time in favour of African Americans. That's left me more puzzled than ever. I still don't really know whether I'm racist.