Glitch in YouTube's tool for tracking obscene comments

Part of YouTube's system for reporting sexualised comments left on children's videos has not been functioning correctly for more than a year, say volunteer moderators.

They say there could be up to 100,000 predatory accounts leaving indecent comments on videos.

A BBC Trending investigation has discovered a flaw in a tool that enables the public to report abuse.

But YouTube says it reviews the "vast majority" of reports within 24 hours.

It says it has no technical problems in its reporting mechanism and that it takes child abuse extremely seriously. On Wednesday, the company announced new measures to protect children on the site.

User reports

YouTube is the world's largest video-sharing site. In addition to algorithms that can automatically block illegal and exploitative videos, it relies on users to report illegal behaviour or content that goes against its rules. The company says it has a zero-tolerance policy against any form of grooming or child endangerment.

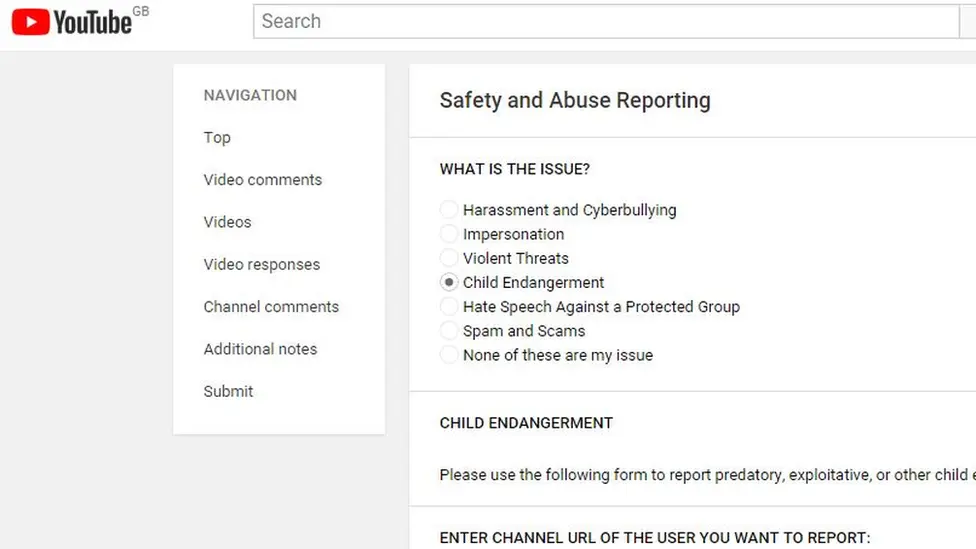

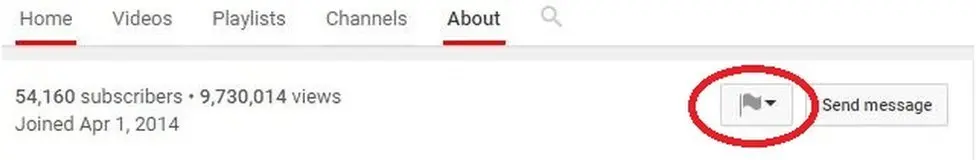

Users can use an online form to report potentially predatory accounts, and they are then asked to include links to relevant videos and comments. The reports then go to moderators - YouTube employees who review the material and have the power to delete it.

However, sources told Trending that after members of the public submitted information on the form, the associated links might be missing from the report. YouTube employees could see that a particular account had been reported, but had no way of knowing which specific comments were being flagged.

Trusted Flaggers

BBC Trending was informed of the issue by members of YouTube's Trusted Flagger programme - a group that includes individuals, as well as some charities and law enforcement agencies. The programme began in 2012, and those involved have special tools to alert YouTube to potential violations.

The company says reports of violations by Trusted Flaggers are accurate more than 90% of the time. The volunteers are not paid by YouTube, but do receive some perks such as invitations to conferences.

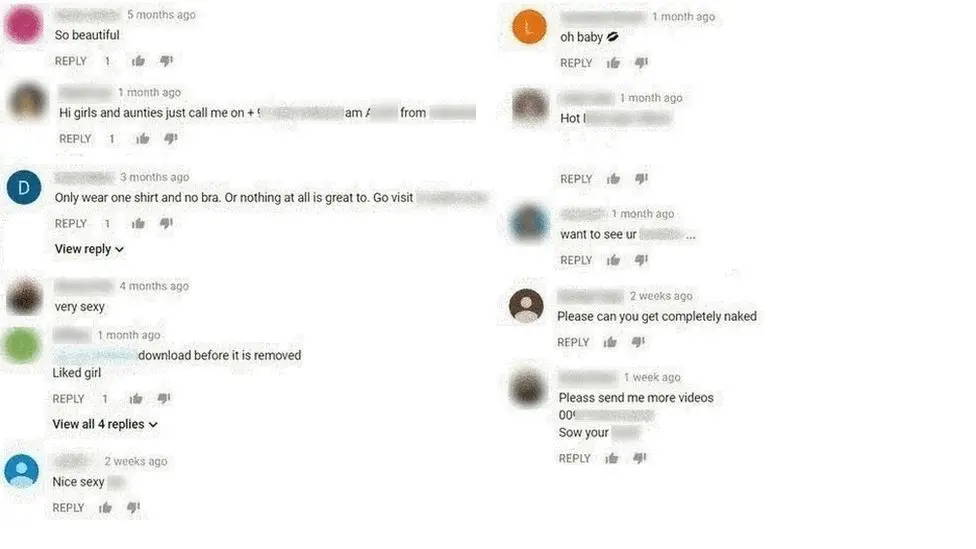

With the help of a small group of Trusted Flaggers, Trending identified 28 comments directed at children that were clearly against the site's guidelines.

The comments are shocking. Some of them are extremely sexually explicit. Others include the phone numbers of adults, or requests for videos to fulfil sexual fetishes. They were left on YouTube videos posted by young children and they are exactly the kind of material that should be immediately removed under YouTube's own rules - and in many cases reported to the authorities.

The children in the videos appeared to be younger than 13 years old, the minimum age for registering an account on YouTube. The videos themselves did not have sexual themes, but showed children emulating their favourite YouTube stars by, for instance, reviewing toys or showing their "outfit of the day".

The explicit comments on these videos were passed on to the company using its form to report child endangerment - the same form that is available to general users.

Over a period of several weeks, five of the comments were deleted, but no action was taken against the remaining 23 until Trending contacted the company and provided a full list. All of the predatory accounts were then deleted within 24 hours.

Members of the Trusted Flaggers programme told Trending that they felt their efforts in taking down such accounts and comments were not being fully supported by the company. They spoke, as a group, on condition of anonymity because of the nature of the work they do.

"We don't have access to the tools, technologies and resources a company like YouTube has or could potentially deploy," members of the programme told Trending. "So for example any tools we need, we create ourselves."

"There are loads of things YouTube could be doing to reduce this sort of activity, fixing the reporting system to start with. But for example, we can't prevent predators from creating another account and have no indication when they do, so we can take action."

New measures

YouTube has come under pressure recently because of the persistence of inappropriate and potentially illegal videos and other content on its site.

BBC Trending previously reported on spoofs of popular cartoons which contain disturbing and inappropriate content not suitable for children. The site recently announced new restrictions on the "creepy" videos.

Recent reports by The Times, Buzzfeed and other outlets have also highlighted disturbing videos both featuring children and targeted towards young people. And in August, Trending revealed a huge backlog of child endangerment reports made by the Trusted Flaggers themselves.

Since then, the Trusted Flaggers who spoke to Trending say more attention is paid to their reports and that most of their reports are being dealt with in days. But in part because of the shortcomings in the public reporting system, the group estimates that there are "between 50,000 to 100,000 active predatory accounts still on the platform".

Earlier in October, YouTube announced additional measures to crack down on disturbing videos and to protect children.

"In recent months, we've noticed a growing trend around content on YouTube that attempts to pass as family-friendly, but is clearly not," the company said in a blog post.

The measures include increasing enforcement, terminating channels that might endanger children, and removing ads from some videos.

The company also announced that starting this week it will disable commenting on videos of children that have attracted sexual or predatory comments.

How to protect children online

- Be aware of what your children are doing on the internet.

- Pay particular attention to comments being made on videos and sudden spikes in popularity for content posted by children online.

- The NSPCC has a series of guidelines about keeping children safe online.

- They promote the acronym TEAM: Talk about staying safe online; Explore the online world together; Agree rules about what's OK and what's not; and Manage your family's settings and controls.

- There are more resources on the BBC Stay Safe site.

- In the UK, online grooming can be reported via the Click CEOP button.

The Children's Commissioner for England, Anne Longfield, described the findings as "very worrying".

"This is a global platform and so the company need to ensure they have a global response. There needs to be a company-wide response that absolutely puts child protection as a number one priority, and has the people and mechanisms in place to ensure that no child has been put in an unsafe position while they are using the platform."

The National Crime Agency told Trending: "It is vital that online platforms used by children and young people have in place robust mechanisms and processes to prevent, identify and report sexual exploitation and abuse."

YouTube response

A YouTube spokesperson said: "We receive hundreds of thousands of flags of content every day and the vast majority of content flagged for violating our guidelines is reviewed in 24 hours.

"Content that endangers children is abhorrent and unacceptable to us.

"We have systems in place to take swift action on this content with dedicated policy specialists reviewing and removing flagged material around the clock, and terminating the accounts of those that leave predatory comments outright."

The company said that in the past week they've disabled comments on thousands of videos and shut down hundreds of accounts that have made predatory comments.

"We are committed to getting this right and recognise we need to do more, both through machine learning and by increasing human and technical resources."

Reporting by Elizabeth Cassin and Anisa Subedar

Blog by Mike Wendling

You can follow BBC Trending on Twitter @BBCtrending, and find us on Facebook. All our stories are at bbc.com/trending.