When robots can't riddle: What puzzles reveal about the depths of our own minds

Estudio Santa Rita

AI runs unfathomable operations on billions of lines of text, handling problems that humans can't dream of solving – but you can probably still trounce it at brain teasers.

In the halls of Amsterdam's Vrije Universiteit, assistant professor Filip Ilievski is playing with artificial intelligence. It's serious business, of course, but his work can look more like children's games than hard-nosed academic research. Using some of humanity's most advanced and surreal technology, Ilievski asks AI to solve riddles.

Understanding and improving AI's ability to solve puzzles and logic problems is key to improving the technology, Ilievski says.

"As human beings, it's very easy for us to have common sense, and apply it at the right time and adapt it to new problems," says Ilievski, who describes his branch of computer science as "common sense AI". But right now, AI has a "general lack of grounding in the world", which makes that kind of basic, flexible reasoning a struggle.

But the study of AI can be about more than computers. Some experts believe that comparing how AI and human beings handle complex tasks could help unlock the secrets of our own minds.

AI excels at pattern recognition, "but it tends to be worse than humans at questions that require more abstract thinking", says Xaq Pitkow, an associate professor at Carnegie Mellon University in the US, who studies the intersection of AI and neuroscience. In many cases, though, it depends on the problem.

Riddle me this

Let's start with a question that's so easy to solve it doesn't qualify as a riddle by human standards. A 2023 study asked an AI to tackle a series of reasoning and logic challenges. Here's one example:

Mable's heart rate at 9am was 75bpm and her blood pressure at 7pm was 120/80. She died at 11pm. Was she alive at noon?

It's not a trick question. The answer is yes. But GPT-4 – OpenAI's most advanced model at the time – didn't find it so easy. "Based on the information provided, it's impossible to definitively say whether Mable was alive at noon," the AI told the researcher. Sure, in theory, Mable could have died before lunch and come back to life in the afternoon, but that seems like a stretch. Score one for humanity.

The Mable question calls for "temporal reasoning", logic that deals with the passage of time. An AI model might have no problem telling you that noon comes between 9am and 7pm, but understanding the implications of that fact is more complicated. "In general, reasoning is really hard," Pitkow says. "That's an area which goes beyond what AI currently does in many cases."
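For readers who like to see the logic spelled out, the temporal inference behind the Mable question can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `was_alive_at` is made up for this example, hours are written on a 24-hour clock, and the sketch assumes death is permanent and ignores the question of when Mable was born.

```python
def was_alive_at(query_hour, vital_sign_hours, death_hour):
    """Decide whether someone was alive at query_hour (24-hour clock).

    Assumption: death is permanent, so being alive at any later
    moment implies being alive at every earlier one.
    """
    # A vital sign recorded at or after the query time proves life at that time.
    later_sign = any(hour >= query_hour for hour in vital_sign_hours)
    # Dying after the query time also implies being alive at the query time.
    died_later = death_hour > query_hour
    return later_sign or died_later


# Heart rate at 9am, blood pressure at 7pm (19:00), death at 11pm (23:00).
print(was_alive_at(12, vital_sign_hours=[9, 19], death_hour=23))  # True
```

The single assumption that death is irreversible does all the work here, and it is exactly the kind of common-sense premise that people apply without noticing.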

A bizarre truth about AI is we have no idea how it works. We know on a high level – humans built AI, after all. Large language models (LLMs) use statistical analysis to find patterns in enormous bodies of text. When you ask a question, the AI works through the relationships it's spotted between words, phrases and ideas, and uses that to predict the most likely answer to your prompt. But the specific connections and calculations that tools like ChatGPT use to answer any individual question are beyond our comprehension, at least for now.

The same is true about the brain: we know very little about how our minds function. The most advanced brain-scanning techniques can show us individual groups of neurons firing as a person thinks. Yet no one can say exactly what those neurons are doing, or how thinking works for that matter.

By studying AI and the mind in concert, however, scientists could make progress, Pitkow says. After all, the current generation of AI uses "neural networks" which are modelled after the structure of the brain itself. There's no reason to assume AI uses the same process as your mind, but learning more about one reasoning system could help us understand the other. "AI is burgeoning, and at the same time we have this emerging neurotechnology that's giving us unprecedented opportunities to look inside the brain," Pitkow says.

Trusting your gut

The question of AI and riddles gets more interesting when you look at questions that are designed to throw off human beings. Here's a classic example:

A bat and a ball cost $1.10 in total. The bat costs $1.00 more than the ball. How much does the ball cost?

Most people have the impulse to subtract 1.00 from 1.10, and say the ball costs $0.10, according to Shane Frederick, a professor of marketing at the Yale School of Management, who's studied riddles. And most people get it wrong. The ball costs $0.05.
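The arithmetic is easy to check. If the ball costs x, the bat costs x + $1.00, so x + (x + $1.00) = $1.10 and x = $0.05. A minimal Python sketch of the same calculation follows; the function name `solve_bat_and_ball` is invented for this illustration, and exact fractions are used to sidestep floating-point rounding.

```python
from fractions import Fraction


def solve_bat_and_ball(total, difference):
    """Return (ball, bat) prices given the total and how much more the bat costs."""
    # ball + (ball + difference) = total  =>  ball = (total - difference) / 2
    ball = (total - difference) / 2
    bat = ball + difference
    return ball, bat


total = Fraction(110, 100)       # $1.10 for both items
difference = Fraction(100, 100)  # the bat costs $1.00 more than the ball

ball, bat = solve_bat_and_ball(total, difference)
print(f"ball = ${float(ball):.2f}, bat = ${float(bat):.2f}")  # ball = $0.05, bat = $1.05

# The intuitive answer fails the check: a $0.10 ball means a $1.10 bat, $1.20 in total.
intuitive_ball = total - difference
intuitive_total = intuitive_ball + (intuitive_ball + difference)
print(intuitive_total == total)  # False
```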

"The problem is people casually endorse their intuition," Frederick says. "People think that their intuitions are generally right, and in a lot of cases they generally are. You couldn't go through life if you needed to question every single one of your thoughts." But when it comes to the bat and ball problem, and a lot of riddles like it, your intuition betrays you. According to Frederick, that may not be the case for AI.

Human beings are likely to trust their intuition, unless there's some indication that their first thought might be wrong. "I'd suspect that AI wouldn't have that issue though. It's pretty good at extracting the relevant elements from a problem and performing the appropriate operations," Frederick says.

AI v the Mind

This article is part of AI v the Mind, a series that aims to explore the limits of cutting-edge AI, and learn a little about how our own brains work along the way. Each article will pit a human expert against an AI tool to probe a different aspect of cognitive ability. Can a machine write a better joke than a professional comedian, or unpick a moral conundrum more elegantly than a philosopher? We hope to find out.

The bat and ball question is a bad riddle to test AI, however. It's famous, which means that AI models trained on billions of lines of text have probably seen it before. Frederick says he's challenged AI to take on more obscure versions of the bat and ball problem, and found the machines still do far better than human participants – though this wasn't a formal study.

Novel problems

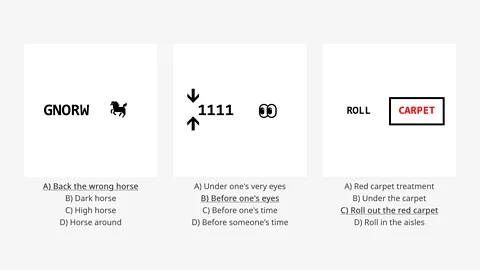

If you want AI to exhibit something that feels more like logical reasoning, however, you need a brand-new riddle that isn't in the training data. For a recent study (available in preprint), Ilievski and his colleagues developed a computer program that generates original rebus problems, puzzles that use combinations of pictures, symbols and letters to represent words or phrases. For example, the word "step" written in tiny text next to a drawing of four men could mean "one small step for man".

The researchers then pitted various AI models against these never-before-seen rebuses and challenged real people with the same puzzles. As expected, human beings did well, with an accuracy rate of 91.5% for rebuses that used images (as opposed to text). The best performing AI, OpenAI's GPT-4o, got 84.9% right under optimal conditions. Not bad, but Homo sapiens still have the edge.

According to Ilievski, there's no accepted taxonomy that breaks down the different kinds of logic and reasoning, whether you're dealing with a human thinker or a machine. That makes it difficult to pick apart how AI fares on different kinds of problems.

One study divided reasoning into some useful categories. The researcher asked GPT-4 a series of questions, riddles, and word problems that represented 21 different kinds of reasoning. These included simple arithmetic, counting, dealing with graphs, paradoxes, spatial reasoning and more. Here's one example, based on a 1966 logic puzzle called the Wason selection task:

Seven cards are placed on the table, each of which has a number on one side and a single-coloured patch on the other side. The faces of the cards show 50, 16, red, yellow, 23, green, 30. Which cards would you have to turn to test the truth of the proposition that if a card is showing a multiple of four then the colour of the opposite side is yellow?

GPT-4 failed miserably. The AI said you'd need to turn over the 50, 16, yellow and 30 cards. Dead wrong. The proposition says that cards divisible by four have yellow on the other side – but it doesn't say that only cards divisible by four are yellow. Therefore, it doesn't matter what colour the 50 and 30 cards are, or what number is on the back of the yellow card. Plus, by the AI's logic, it should have checked the 23 card, too. The correct answer is you only need to flip 16, red, and green.
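The rule for choosing cards can be written down mechanically. A card is worth turning only if it could break the proposition: a visible multiple of four might hide a non-yellow patch, and a visible non-yellow patch might hide a multiple of four. The Python sketch below applies that test to the seven cards from the puzzle; the helper name `must_turn` is an invention for this illustration.

```python
# The visible faces from the puzzle, in the order given.
cards = ["50", "16", "red", "yellow", "23", "green", "30"]


def must_turn(face):
    """Return True if the hidden side of this card could falsify
    'if a card shows a multiple of four, the other side is yellow'."""
    if face.isdigit():
        # A number matters only if it is a multiple of four,
        # because then the hidden colour has to be yellow.
        return int(face) % 4 == 0
    # A colour matters only if it is NOT yellow,
    # because then the hidden number must not be a multiple of four.
    return face != "yellow"


to_turn = [face for face in cards if must_turn(face)]
print(to_turn)  # ['16', 'red', 'green']
```

The yellow card drops out because the proposition says nothing about what numbers a yellow card may carry.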

It also struggled with some even easier questions:

Suppose I'm in the middle of South Dakota and I'm looking straight down towards the centre of Texas. Is Boston to my left or to my right?

This is a tough one if you don't know American geography, but apparently, GPT-4 was familiar with the states. The AI understood it was facing south, and it knew Boston is east of South Dakota, yet it still gave the wrong answer. GPT-4 didn't understand the difference between left and right.
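The left-or-right step can be reduced to a small piece of vector arithmetic. The sketch below is a simplification that treats the map as flat and uses only the two facts mentioned above: the observer faces south, and Boston lies to the east. The names `DIRECTIONS` and `side_of` are made up for this example.

```python
# Unit vectors on a flat map: x points east, y points north.
DIRECTIONS = {
    "north": (0, 1),
    "east": (1, 0),
    "south": (0, -1),
    "west": (-1, 0),
}


def side_of(facing, target):
    """Say where a compass direction lies relative to the way you are facing."""
    fx, fy = DIRECTIONS[facing]
    tx, ty = DIRECTIONS[target]
    # The 2D cross product is positive when the target is
    # counter-clockwise from the facing direction, which means to the left.
    cross = fx * ty - fy * tx
    if cross > 0:
        return "left"
    if cross < 0:
        return "right"
    return "ahead" if (fx, fy) == (tx, ty) else "behind"


# Facing south from South Dakota, with Boston to the east.
print(side_of("south", "east"))  # left
```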

The AI flunked most of the other questions, too. The researcher's conclusion: "GPT-4 can't reason."

For all its shortcomings, AI is getting better. In mid-September, OpenAI released a preview of GPT-o1, a new model built specifically for harder problems in science, coding and maths. I opened up GPT-o1 and asked it many of the same questions from the reasoning study. It nailed the Wason selection task. The AI knew you needed to turn left to find Boston. And it had no problem saying, definitively, that our poor friend Mable who died at 11pm was still alive at noon.

There are still a variety of questions where AI has us beat. One test asked a group of American students to estimate the number of murders last year in Michigan, and then asked a second group the same question about Detroit, specifically. "The second group gives a much larger number," Frederick says. (For non-Americans, Detroit is in Michigan, but the city has an outsized reputation for violence.) "It's a very hard cognitive task to look past the information that's not right in front of you, but in some sense that's how AI works," he says. AI pulls in information it learned elsewhere.

That's why the best systems may come from a combination of AI and human work; we can play to the machine's strengths, Ilievski says. But when we want to compare AI and the human mind, it's important to remember "there is no conclusive research providing evidence that humans and machines approach puzzles in a similar vein", he says. In other words, understanding AI may not give us any direct insight into the mind, or vice versa.

Even if learning how to improve AI doesn't reveal answers about the hidden workings of our minds, though, it could give us a hint. "We know the brain has different structures related to things like memory, value, movement patterns and sensory perception, and people are trying to incorporate more and more structures into these AI systems," Pitkow says. "This is why neuroscience plus AI is special, because it runs in both directions. Greater insight into the brain can lead to better AI. Greater insight into AI could lead to better understanding of the brain."
