What is longtermism?

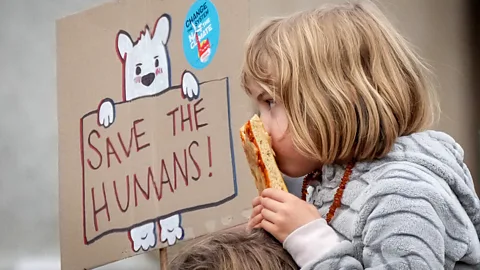

Filippo Monteforte/AFP/Getty Images

There is a strong moral reason to consider how the actions and decisions we take today affect the lives of huge numbers of future people, argues philosopher William MacAskill. Here he makes the case for longtermism.

Humanity, today, is in its adolescence. Most of a teenager’s life is still ahead of them, and their decisions can have lifelong effects. Similarly, most of humanity’s life lies ahead – an estimated 118 billion people have already lived, but vastly more people, perhaps thousands or even millions of times that number, are yet to be born.

And some of the decisions we make this century will impact the entire course of humanity's future.

Contemporary society does not appreciate this fact. To mature as a species, we need to embrace a perspective called longtermism – a way of thinking that differs greatly from the prevalent mindset today.

Longtermism is the view that positively influencing the long-term future is a key moral priority of our time. It's about taking seriously the sheer scale of the future, and how high the stakes might be in shaping it. It means thinking about the challenges we might face in our lifetimes that could impact civilisation’s whole trajectory, and taking action to benefit not just the present generation, but all generations to come.

The case for longtermism rests on the simple idea that future people matter. This is common sense. We care about the impacts of climate change, radioactive nuclear waste, and resource depletion not just because they affect our lives, but because they affect the lives of our grandchildren, and their grandchildren in turn. If you could prevent a genocide in a thousand years, the fact that "those people don't exist yet" would do nothing to justify inaction.

Many social movements have fought for greater recognition for disempowered members of society. Advocates of longtermism want to extend this recognition to future people, too. Just as we should care about the lives of people who are distant from us in space, we should care about people who are distant from us in time – those who live in the future.

This matters because the future could be vast. We have 300,000 years of humanity behind us, but how many years are still to come?

Christopher Furlong/Getty Images

COMMENT & ANALYSIS

The author of this article, William MacAskill, is an associate professor of philosophy at the University of Oxford and is a co-founder of the Centre for Effective Altruism, Giving What We Can and 80,000 Hours. He is also the author of a new book on longtermism, What We Owe the Future.

If humans were a typical mammal species, we would have 700,000 years still to go. But we are not typical. We may destroy ourselves in the next few centuries, or we could last for hundreds of millions of years. We can't know which of these futures awaits us, but we know that humanity's life expectancy – the amount of time our species has the potential to live – is huge.

We may stand at history's very beginning. If we avoid catastrophe, almost everyone to ever exist is yet to come.
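To put rough numbers on this claim, the sketch below takes the estimate of 118 billion people who have lived so far, together with the suggestion that the future population could be thousands or even millions of times larger. The function name `future_share` and the two multipliers are illustrative assumptions, not forecasts.

```python
PAST_PEOPLE = 118e9  # estimate of people who have already lived (from the article)

def future_share(multiplier: float, past: float = PAST_PEOPLE) -> float:
    """Fraction of all people ever to exist who are still to be born,
    assuming the future population is `multiplier` times the past one."""
    future = past * multiplier
    return future / (past + future)

for multiplier in (1_000, 1_000_000):  # illustrative scenarios only
    print(f"{multiplier:>9,}x -> {future_share(multiplier):.4%} yet to come")
```

Under either assumption, at least 99.9% of everyone who will ever live has not yet been born.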

Future generations therefore have enormous moral importance. But what can we do to help them?

First, we can make sure that we have a future at all. And, second, we can help ensure that that future is good.

Ensuring civilisation's survival

The first step is reducing the risk of human extinction. If our actions this century cause us to go extinct, there is little uncertainty about the impact on future generations: our actions will have robbed them of their chance to live altogether. The potential for a flourishing future would be entirely lost.

One of the greatest threats comes from pandemics. The tragedy of Covid-19 has made us painfully aware of how harmful pandemics can be. Future pandemics could be even worse as we continue to intrude into natural habitats in ways that increase the risk of diseases crossing over from animals to humans. Advances in biotechnology are helping us develop vaccines to protect us from disease, but they are also giving us the power to create new pathogens, which could be used as bioweapons of unprecedented destructive power.

The community prediction platform Metaculus, which uses the collective wisdom of its members to forecast real-world events, currently puts the risk of a pandemic that kills over 95% of the world's population this century at almost 1%. If you're reading this in a relatively well-off country, you are more likely to die in a pandemic than you are from fires, drowning, and murder combined. It's as if humanity as a whole is about to take on the death-risk from skydiving – 1,000 times in a row. For such grave stakes, this risk is far too great.
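The skydiving comparison can be checked with a short calculation. The sketch below assumes a fatality risk of roughly 1 in 100,000 per jump, a commonly cited ballpark that does not come from this article, and treats the jumps as independent. The function name is hypothetical.

```python
def cumulative_risk(per_event_risk: float, repetitions: int) -> float:
    """Chance of at least one fatal outcome across independent repetitions."""
    return 1 - (1 - per_event_risk) ** repetitions

RISK_PER_JUMP = 1 / 100_000  # assumed ballpark, not a figure from the article
JUMPS = 1_000                # "1,000 times in a row"

print(f"{cumulative_risk(RISK_PER_JUMP, JUMPS):.2%}")
```

Under these assumptions the cumulative risk comes out just under 1%, in line with the Metaculus figure quoted above.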

We can act now to reduce these risks. Kevin Esvelt is a professor of biology at the Massachusetts Institute of Technology (MIT) in the US, and one of the developers of CRISPR gene drive technology. Gravely concerned by catastrophic risk from engineered pathogens, he founded the Nucleic Acid Observatory, which aims to constantly monitor wastewater for new diseases so that we can detect and respond to pandemics as soon as they arise. This technique was recently used to find traces of polio in the UK.

Esvelt has also championed research into far-ultraviolet (far-UVC) lighting. Promising early research suggests that some forms of far-UVC light can sterilise a room by inactivating disease-causing microbes, without harming people in the process. If we can bring down the cost of far-UVC lighting, and install it in buildings around the world, we can both drastically reduce pandemic risk and make most respiratory diseases a thing of the past.

Other groups are focused on pandemic preparedness, too. The recently established Oxford Pandemic Sciences Institute is working on speeding up the evaluation of vaccines for new outbreaks. Drug start-up Alvea is trying to quickly produce an affordable, shelf-stable Covid vaccine that can cheaply vaccinate everyone, rich and poor alike. The company plans to use its platform to rapidly develop vaccines for future pandemics.

These efforts show that we really can help ensure civilisation's survival – while reaping enormous benefits for the present generation, too.

Trajectory changes

Ensuring the survival of civilisation isn't the only way to help future generations. We can also help to ensure that their civilisation flourishes.

One major way is by improving the values that guide society. Historical activists and thinkers have already achieved long-lasting moral improvements through campaigns for democracy, rights for people of colour, and women's suffrage. We can build on this progress to leave an even better world for our children and grandchildren. We can foster attitudes and institutions that give serious moral consideration to every person and sentient being.

Robyn Beck/AFP/Getty Images

But this progress is by no means guaranteed. Future technology such as advanced artificial intelligence (AI) could give bad political actors far greater ability to entrench their power. The 20th Century saw totalitarian regimes rise out of democracies, and if that happened again, the freedom and equality we have gained over the last century could be lost. In the worst case, the future might not be controlled by humans at all – we could be outcompeted by superintelligent AI systems whose goals conflict with our own.

Indeed, several indicators suggest that AI systems may surpass human capabilities within a matter of decades, and it is far from clear that the transition to such a transformative, general-purpose technology will go well. People inspired by longtermism are therefore working to ensure that AI systems are honest and safe. For example, Stuart Russell is a professor of computer science at the University of California, Berkeley, and the co-author of the most widely read and influential AI textbook. He co-founded the Centre for Human-Compatible AI, with the aim of aligning advanced artificial intelligence with human goals. Researcher Katja Grace runs AI Impacts, which aims to better understand when we might develop human-level artificial intelligence, and what might happen when we do.

There have been many ways to positively shape the future throughout history: from small acts like planting forests and recording information, to far-sighted moral advocacy whose effects have lasted through the present day.

However, the 21st Century could present a time of truly unprecedented influence over our destiny. The more power we have over our environment and over each other, the more power we have to throw history off course. If humanity is like a teenager, then she is reckless, driving at a faster speed than she can control.

Longtermism in practice

Longtermism is more than just an abstract idea. It has its origins in the effective altruism movement: a philosophy and community that tries to figure out how we can do as much good as possible with our time and money, and then takes action to put those ideas into practice.

The idea of longtermism has led to a tremendous upsurge in action dedicated to helping future generations. Hundreds of people have pledged part of their income, choosing to live on less so that they can donate to causes that benefit future generations. Major foundations, such as Open Philanthropy and the Future Fund, are making future-oriented causes a core philanthropic priority. Young people around the world are using longtermism to inform their career choices.

Organisations, too, are orienting towards the long term across a range of areas. Our World in Data curates and presents information on the most important big-picture issues of our time. Community prediction platform Metaculus aggregates the views of thousands of volunteers to create testable forecasts of future events. The United Nations released an agenda with plans to foreground future generations through new representatives, reports, and declarations – all to be discussed in a "Summit of the Future" in 2023.

There's also a vibrant research community. Longtermism is still a nascent idea, and there's an enormous amount we don't know. How quickly will we move from sub-human to greater-than-human level artificial intelligence, and what should we expect to happen next? How likely is civilisation to recover after collapse? What drives moral progress, and how can we prevent the lock-in of bad values? How can we most effectively take action on the biggest risks we face?

Organisations that work on these topics include the Future of Humanity Institute and Global Priorities Institute at the University of Oxford, where I am chair of the advisory board, alongside the Centre for the Study of Existential Risk at Cambridge, the Stanford Existential Risks Initiative, and the Future of Life Institute.

Back to the present

Does advocating for future generations mean neglecting the interests of those alive today? Not at all. At the moment, only a tiny fraction of society's time and attention is spent explicitly trying to promote a positive long-term future. I don't know how much we should be spending, but it's certainly more than we currently do. As my colleague Toby Ord points out, the Biological Weapons Convention – the single international body tasked with prohibiting the proliferation of bioweapons – has an annual budget smaller than that of the average McDonald's restaurant. Given how neglected and ignored future generations' interests currently are, even small increases in society's concern for its future could have a transformative impact.

What's more, action for the long term has benefits in the short term, too. As we've seen, preventing the next pandemic stands to save lives in both the present and the future. Innovation in clean energy helps mitigate climate change, but it also reduces the toll of fossil fuel-related air pollution, which kills 3.6 million people every year. Much the same could be said for other efforts that stand out as longtermist priorities – such as efforts to promote and improve democracy, to reduce the risk of a third world war, or to improve our ability to forecast new catastrophes before they arise.

Timothy A Clary/Getty Images

We don't often think about the vast future that may lie before us. We rarely worry about catastrophes that could imperil civilisation, or how new technologies could result in perpetual dystopia. And we almost never reflect on just how good life might become: how we might be able to give our great-great-grandchildren lives of great joy and accomplishment, free from stress and unnecessary suffering.

But we should think about such things. After all, the future is just as real as the present or the past. We overlook it merely because it’s out of sight, and therefore out of mind.

In our lifetimes, we will face challenges – like the development of advanced artificial intelligence, and the threat of bioweapons used in a third world war – that could prove pivotal for the entire future of the human race.

It's up to us to make sure we respond to these challenges wisely. If we do, then we can leave our descendants a world that is beautiful and just – one that can flourish for millions of years to come.

* William MacAskill is an associate professor of philosophy at the University of Oxford and a trustee for the Centre for Effective Altruism. His book on longtermism, What We Owe the Future, is due to be published in September 2022.
