The truth about the Turing Test

The Weinstein Company

When bots can pass for human in conversation, it will be a milestone in AI, but not necessarily the significant moment that sci-fi would have us believe. Philip Ball explores the strengths and limitations of the Turing Test.

Alan Turing made many predictions about artificial intelligence, but one of his lesser-known ones may sound familiar to those who have heard Stephen Hawking or Elon Musk warn about AI’s threat in 2015. “At some stage… we should have to expect the machines to take control,” he wrote in 1951.

He not only seemed quite sanguine about the prospect but possibly relished it: his friend Robin Gandy recalled that when Turing read aloud some of the passages in his seminal ‘Turing Test’ paper, it was “always with a smile, sometimes with a giggle”. That, at least, gives us reason to doubt the humourless portrayal of Turing in the 2014 biopic The Imitation Game.

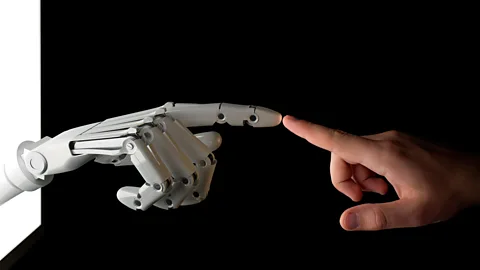

Turing has influenced how we view AI ever since – the Turing Test has often been held up as a vital threshold AI must pass en route to true intelligence. If an AI machine could fool people into believing it is human in conversation, he proposed, then it would have reached an important milestone.

What's more, the Turing Test has been referenced many times in popular-culture depictions of robots and artificial life – perhaps most notably inspiring the polygraph-like Voight-Kampff Test that opened the movie Blade Runner. It was also namechecked in Alex Garland’s Ex Machina.

Getty Images

But more often than not, these fictional representations misrepresent the Turing Test, turning it into a measure of whether a robot can pass for human. The original Turing Test wasn’t intended for that, but rather, for deciding whether a machine can be considered to think in a manner indistinguishable from a human – and that, even Turing himself discerned, depends on which questions you ask.

What’s more, there are many other aspects of humanity that the test neglects – and that’s why several researchers have devised new variants of the Turing Test that aren’t about the capacity to hold a plausible conversation.

Take game-playing, for example. For a machine to rival or surpass human cognitive powers in something more sophisticated than mere number-crunching, Turing thought that chess might be a good place to start – a game that seems to be characterised by strategic thinking, perhaps even invention. After Deep Blue’s victory over World Chess Champion Garry Kasparov in 1997, we have clearly crossed that particular threshold. And we now have algorithms that are all but invincible (in the long term) for bluffing games like poker – although this turns out to be less psychological than you might think, and more a matter of hard maths.

What about something more creative and ineffable, like music? Machines can fool us there too. There is now a music-composing computer called Iamus, which produces work sophisticated enough to be deemed worthy of attention by professional musicians. Iamus’s developer Francisco Vico of the University of Malaga and his colleagues carried out a kind of Turing Test by asking 250 subjects – half of them professional musicians – to listen to one of Iamus’s compositions and music in a comparable style by human composers, and decide which is which. “The computer piece raises the same feelings and emotions as the human one, and participants can’t distinguish them”, says Vico. “We would have obtained similar results by flipping coins.”

Some say that computer poetry has passed the test too, though one can’t help thinking that this says more about the discernment of the judges. Consider the line: “you are a sweet-smelling diamond architecture”.

Then there’s the “Turing touch test”. Turing himself claimed that even if a material were ever to be found that mimicked human skin perfectly, there was little reason to try to make a machine more human by giving it artificial flesh. Yet the robot Ava in Ex Machina clearly found it worthwhile, intent as she was on passing unnoticed within normal human society.

A somewhat more prosaic reason to devise new varieties of Turing Tests is not to pass off a machine as human but simply to establish if an AI or robotic system is up to scratch. Computer scientist Stuart Geman of Brown University in Providence, Rhode Island, and collaborators at the Johns Hopkins University in Baltimore, recently described a “visual Turing Test” for a computer-vision system that attempted to see if the system could extract meaningful relationships and narratives from a scene in the way that we can, rather than simply identifying specific objects. Such a capability is becoming increasingly relevant for surveillance systems and biometric sensing.

As for the original Turing Test, its future is likely to be online. Already, online gamers can find themselves unsure if they are competing against a human or a “gaming bot” – in fact, some players actually prefer to play against bots (which are assumed to be less likely to cheat). You can find yourself talking to bots in chatrooms and when making online queries: some are used as administrators to police the system, others are a cheap way of dealing with routine enquiries. Some might be there merely to keep us company – a function for which we can probably anticipate an expanding future market, as Spike Jonze’s 2013 movie Her cleverly explored.

Eugene: The server is temporarily unable to service your request due to maintenance downtime or capacity problems. Please try again later.

I guess we won’t be needing Blade Runners just yet.